Revolutionizing Robotics: MIT Introduces Self-Aware Robots That Learn Through Vision

2025-07-24

Author: Rajesh

A New Era for Robots: Learning Through Sight

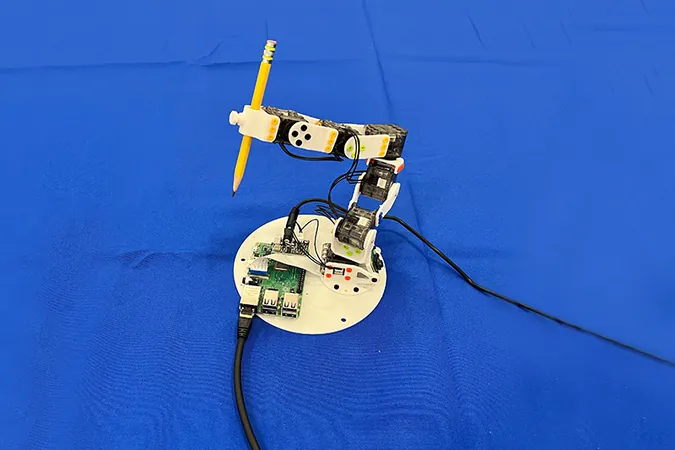

In the heart of MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), a captivating innovation is taking shape: a soft robotic hand that learns to grasp objects, not through intricate sensors or programming, but by simply watching itself with a single camera. This groundbreaking system, termed Neural Jacobian Fields (NJF), provides robots with an unprecedented sense of bodily self-awareness.

Published in Nature on June 25, this research signifies a pivotal shift. As Sizhe Lester Li, the lead researcher and MIT PhD student, highlights, "Instead of programming robots for specific tasks, we're teaching them to learn and adapt autonomously, which could revolutionize robotics as we know it."

Revolutionizing Control: From Rigid to Adaptive Systems

Traditionally, robotics has relied on complex hardware and extensive hand-written control code. NJF takes a different route: it lets a robot build its own understanding of how it moves from visual data alone, without relying on rigid, sensor-heavy designs. This opens the door to a broader range of robotic applications, particularly softer, more flexible designs that are hard to model by hand.

As Li explains, "Just as we learn to control our fingers through observation and adaptation, our system does the same for robots. It engages in random actions and learns how to manipulate its own body based on visual feedback."

Testing the Limits: Versatile Applications of NJF

CSAIL researchers tested NJF across a range of robotic forms, including soft pneumatic hands, rigid robotic hands, and even platforms with no embedded sensors. Each time, the system learned both the robot's shape and how it responded to control commands purely through observation.

The implications are staggering: in the future, NJF-equipped robots could execute precise agricultural tasks, operate in construction environments without a web of sensors, or navigate complex spaces where traditional methods struggle.

The Technology Behind the Magic

At its core, NJF is a neural network that captures two things: a robot's three-dimensional shape and how that shape responds to commands. Drawing on the principles of neural radiance fields (NeRF), NJF learns the geometry of the robot and builds a Jacobian field that predicts how points on the robot's body move in response to motor inputs.
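To make the "Jacobian field" idea concrete, here is a minimal sketch of the underlying math, not the researchers' implementation. It assumes that each 3D point on the robot has a small matrix J (the Jacobian) so that a small change in motor commands `delta_u` moves that point by roughly `J @ delta_u`. The function `jacobian_at` is a hypothetical, hand-written stand-in for the learned network, and the two-motor setup is invented for illustration.

```python
import numpy as np

def jacobian_at(point):
    """Hypothetical stand-in for a learned Jacobian field.

    Returns a 3x2 matrix: the 3D displacement of `point` per unit
    change in each of 2 motor commands. The rule below is made up.
    """
    x, _, _ = point
    return np.array([
        [0.0, 0.0],  # neither motor moves the point along x
        [0.0, 1.0],  # motor 1 shifts the point along y
        [x,   0.0],  # motor 0 lifts the point along z, more so at larger x
    ])

def predict_displacement(point, delta_u):
    """Predict how a point moves for a small command change."""
    return jacobian_at(point) @ delta_u

def solve_command(points, desired_displacements):
    """Find the command change that best produces the desired motion.

    Stacks the per-point Jacobians into one tall matrix and solves
    the resulting linear least-squares problem.
    """
    A = np.vstack([jacobian_at(p) for p in points])
    b = np.concatenate(desired_displacements)
    delta_u, *_ = np.linalg.lstsq(A, b, rcond=None)
    return delta_u

points = [np.array([1.0, 0.0, 0.0]), np.array([2.0, 0.0, 0.0])]
true_delta_u = np.array([0.5, -0.2])
targets = [predict_displacement(p, true_delta_u) for p in points]
recovered = solve_command(points, targets)
```

In this toy setup, `recovered` should match `true_delta_u` up to floating-point error, because the targets were generated from the same linear model. A real system would query a trained network instead of `jacobian_at`.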

The robot trains by making random movements while cameras record the results. From that footage, the system works out the robot's motion dynamics on its own, with no prior programming required.
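The "learn from random movements" step can be illustrated with a much simpler technique than the one in the paper. The sketch below swaps the neural network for ordinary least squares: it assumes the displacement of a single tracked point has already been measured for each random command, and fits one Jacobian matrix to those pairs. The names `fit_jacobian` and `true_J`, the noise level, and the simulated data are all assumptions made for this example.

```python
import numpy as np

def fit_jacobian(commands, displacements):
    """Estimate J such that displacement is approximately J @ command.

    commands:      (N, m) array of random command changes
    displacements: (N, d) array of observed point displacements
    Returns a (d, m) Jacobian estimate via linear least squares.
    """
    J_transposed, *_ = np.linalg.lstsq(commands, displacements, rcond=None)
    return J_transposed.T

rng = np.random.default_rng(0)

# Simulated ground truth, unknown to the "robot" (made up for this demo).
true_J = np.array([
    [0.5,  0.0],
    [0.0, -1.0],
    [0.2,  0.3],
])

# 50 random command changes and the noisy displacements they produce.
commands = rng.uniform(-1.0, 1.0, size=(50, 2))
noise = rng.normal(scale=1e-3, size=(50, 3))
observed = commands @ true_J.T + noise

estimated_J = fit_jacobian(commands, observed)
```

With enough varied random commands, `estimated_J` lands close to `true_J`. The point of the sketch is only that random exploration plus observation is enough to recover how commands map to motion; the actual system learns this from camera images rather than from pre-measured displacements.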

The Future of Robotics: Accessible and Adaptive

As robotics shifts away from rigid designs, NJF paves the way for more accessible technologies. The vision-based system allows robots to function dynamically in unpredictable environments, capitalizing on the reliability of vision rather than costly sensors.

"Vision provides essential cues for robots to localize and control themselves without GPS or extensive external systems, enabling adaptability in everything from drones navigating indoors to legged robots crossing challenging terrain," says co-author Daniela Rus, a prominent MIT professor.

Looking Ahead: Simplifying Robotics for Everyone

Currently, NJF requires extensive initial training with multiple cameras and specific robot models, but researchers envision a more user-friendly future. Imagine hobbyists recording their robots' movements with a simple smartphone to create personalized control models, with no elaborate setup necessary.

NJF doesn't yet generalize across different robot types, and it lacks the tactile sensing needed for contact-rich tasks, but the team is actively working on both limitations to give robots better adaptability and understanding.

Li encapsulates the essence of this breakthrough: "NJF sparks a new foundation for robotics, granting machines an intuition about their movements, moving us closer to a world where robots can learn and grow, just like us."
