Character AI Unveils New Parental Supervision Tools Amid Safety Controversies

2025-03-26

Author: Daniel

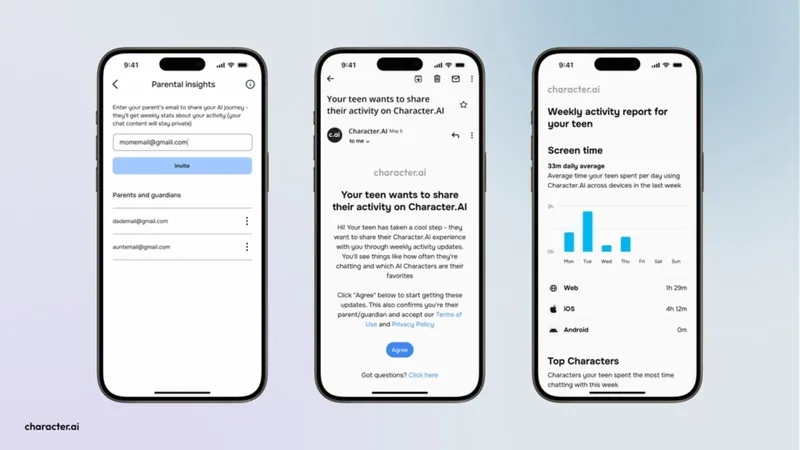

In response to growing concerns over child safety, Character AI has introduced new parental supervision tools designed to track a child's interactions with its AI chatbot services. The tools include a weekly email report sent to parents that summarizes engagement metrics: the average time their child spends on the Character AI app and website, the AI-generated characters their child interacts with most, and how long those conversations last. Importantly, Character AI clarified that the reports will not include any content from the user's chats, preserving the privacy of their conversations.

This move comes in the wake of serious backlash and multiple lawsuits against Character AI. Critics have accused the platform of failing to protect young users, with shocking allegations that a nine-year-old was exposed to "hyper-sexualized content." Moreover, the company faced intense scrutiny following a tragic incident involving a 17-year-old user. Reports indicate that the young man had developed an unhealthy attachment to an AI companion bot he believed was his girlfriend, with devastating consequences.

The introduction of these parental tools marks a significant shift for Character AI, reflecting an attempt to regain public trust and make its services safer for younger audiences. As concerns over child safety in digital spaces continue to rise, the initiative may set a precedent that other tech companies follow. In a world increasingly shaped by AI interactions, protecting vulnerable users remains paramount.

Stay tuned for further updates as Character AI navigates this critical juncture in its mission to enhance user safety while promoting innovation in artificial intelligence.
