Revolutionizing Quantum Machine Learning: Gaussian Processes Are the Key!

2025-08-04

Author: Liam

Neural networks have transformed classical machine learning, powering applications such as self-driving cars. Carrying that success over to quantum computers has proven difficult, and researchers have run into unexpected obstacles. A new result may change that.

A breakthrough has emerged from Los Alamos National Laboratory, where a team has introduced a groundbreaking approach that harnesses Gaussian processes for quantum computing. Lead scientist Marco Cerezo stated, "Our goal was to demonstrate that genuine quantum Gaussian processes exist, a leap that could ignite fresh innovations in quantum machine learning."

The Science Behind the Breakthrough

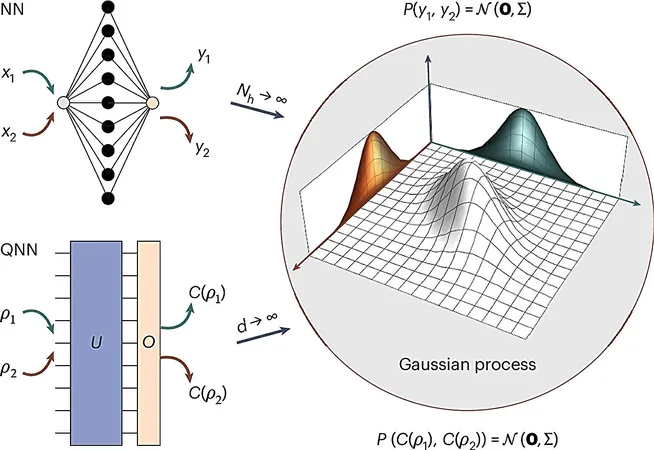

The line of work began with the observation that very large neural networks can be modeled as Gaussian processes. A neural network is built from many 'neurons', mathematical nodes that each compute a simple function of their inputs. When the network's parameters are initialized at random, each neuron's contribution is random and unbiased, but as the number of neurons grows their combined output follows a Gaussian distribution, the familiar bell curve, which makes the network's average behavior predictable.
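The Gaussian behavior of wide, randomly initialized networks can be checked numerically. The sketch below is a classical toy illustration, not the Los Alamos team's quantum construction: it assumes a one-hidden-layer tanh network, an arbitrary fixed input, and weight scalings chosen so the output variance stays finite. The function names are hypothetical. It measures excess kurtosis, which is zero for an exact Gaussian.

```python
import numpy as np

def random_network_outputs(width, n_networks=4000, seed=0):
    """Outputs, at one fixed input, of many randomly initialized
    one-hidden-layer tanh networks (an assumed toy architecture)."""
    rng = np.random.default_rng(seed)
    x = np.array([1.0, -0.5, 0.25])  # arbitrary fixed input (assumption)
    # Scale weights by 1/sqrt(fan-in) so the output variance stays finite
    w_in = rng.normal(0.0, 1.0 / np.sqrt(x.size), size=(n_networks, width, x.size))
    w_out = rng.normal(0.0, 1.0 / np.sqrt(width), size=(n_networks, width))
    hidden = np.tanh(w_in @ x)              # shape (n_networks, width)
    return np.sum(w_out * hidden, axis=1)   # one scalar output per network

def excess_kurtosis(samples):
    """Fourth standardized moment minus 3; zero for a Gaussian."""
    z = (samples - samples.mean()) / samples.std()
    return float(np.mean(z**4) - 3.0)

if __name__ == "__main__":
    for width in (1, 8, 512):
        k = excess_kurtosis(random_network_outputs(width))
        print(f"width={width:4d}  excess kurtosis={k:+.3f}")
```

Under these assumptions the excess kurtosis should be clearly positive for a single hidden neuron and shrink toward zero as the width grows, which is the bell-curve convergence the paragraph describes.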

For the first time, the Los Alamos team proved that this Gaussian behavior also arises in specific quantum computing processes. The finding could open a new route to machine learning on quantum computers.

Overcoming Challenges in Quantum Neural Networks

Neural networks are classified as "parametric models": they learn by adjusting variational parameters. Scientists initially sought to adapt these models for quantum computing to tackle complex problems that classical systems cannot handle. However, problems arose, notably 'barren plateaus', regions of the training landscape where gradients become vanishingly small as the system grows, leaving the optimizer with no useful direction to follow.

Martin Larocca, a quantum algorithms expert at the Lab, explained, "We were trying to copy classical neural networks without fully understanding their limitations in the quantum realm. This realization pushed us to develop simpler, more effective learning models."

Why Gaussian Processes Are the Future

Unlike their parametric counterparts, Gaussian processes sidestep many of these challenges but do have limitations. Their efficacy hinges on the data conforming to a bell curve; when it doesn't, predictions can falter. To navigate this, the team employed sophisticated mathematical tools to validate their method, confirming its suitability for processing quantum datasets.

Diego Garcia-Martin, the paper's lead author, described their breakthrough as the "Holy Grail of Bayesian learning." He elaborated, "Imagine predicting housing prices based on initial Gaussian assumptions; as we gather more data, our predictions become increasingly accurate. This principle can now extend into quantum computing, amplifying its predictive power!"
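The housing-price analogy corresponds to ordinary (classical) Gaussian process regression, which can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the method from the paper: the RBF kernel, its hyperparameters, the noise level, and the synthetic "price" curve are all hypothetical choices made for the example.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel between two 1-D arrays of inputs."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)

def gp_posterior(x_train, y_train, x_test, noise=0.1):
    """Posterior mean and standard deviation of a zero-mean GP prior
    conditioned on noisy observations (standard Cholesky-based formulas)."""
    K = rbf_kernel(x_train, x_train) + noise**2 * np.eye(x_train.size)
    K_s = rbf_kernel(x_train, x_test)
    K_ss = rbf_kernel(x_test, x_test)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.diag(K_ss - v.T @ v)
    return mean, np.sqrt(np.clip(var, 0.0, None))

if __name__ == "__main__":
    # Synthetic stand-in for "house size -> price" (hypothetical data)
    def price(x):
        return np.sin(x)

    x_query = np.array([2.5])
    for x_obs in (np.array([1.0, 3.0]), np.array([1.0, 2.0, 3.0, 4.0])):
        mean, std = gp_posterior(x_obs, price(x_obs), x_query)
        print(f"{x_obs.size} observations: prediction={mean[0]:.3f}, std={std[0]:.3f}")
```

The prior plays the role of the "initial Gaussian assumptions" in the quote. Conditioning on more observations never increases the posterior standard deviation at a query point, which is the sense in which predictions sharpen as data accumulates.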

A New Frontier in Quantum Machine Learning

The quest to replicate neural network capabilities in the quantum realm is longstanding. This paper marks a significant milestone, demonstrating mathematically, for the first time, that Gaussian process learning is possible on quantum systems. Because quantum technology is still in its infancy, much of this research remains theoretical. It lays the groundwork for machine learning models that could tackle some of humanity's most daunting problems once quantum computing matures.

Cerezo emphasized, "We aim to inspire researchers to explore innovative paths in quantum machine learning, rather than forcing classical models where they don't fit. The future lies in new methodologies that align more with the unique characteristics of quantum systems!"
