AI Breakthrough: New Tool Flags Over 1,000 'Questionable' Journals!

2025-08-30

Author: Michael

Revolutionizing Research Integrity with AI

In a groundbreaking development, a team of computer scientists from the University of Colorado Boulder has created an innovative artificial intelligence platform dedicated to identifying dubious scientific journals. This tool addresses a disturbing trend in the research community that could undermine the integrity of scientific publishing.

The Rising Threat of 'Predatory' Journals

Daniel Acuña, the study's lead author and an associate professor in the Department of Computer Science, regularly receives unsolicited emails from unfamiliar journal editors offering to publish his papers for a hefty fee. These so-called 'predatory journals' exploit researchers by promising publication that often comes without any real peer review or quality control.

"There has been a growing effort among scientists and organizations to vet these journals," says Acuña. "But it's like a game of whack-a-mole—once one is flagged, another pops up, often under a new name and website."

AI Takes on the Challenge of Journal Vetting

His team's AI tool automates much of this screening by checking each journal against criteria that signal legitimacy: for example, whether the journal lists a credible editorial board and whether its website contains glaring grammatical errors.
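To make the idea of criterion-based screening concrete, here is a minimal sketch in Python. It is not the team's tool: the `JournalSite` and `BoardMember` types, the two red-flag functions, and the `WEIGHTS` values are all hypothetical. The "grammar" check is a deliberately crude stand-in (doubled words and lowercase sentence starts) rather than a real grammar checker, and a production system would learn its weights from labeled data instead of hard-coding them.

```python
from dataclasses import dataclass, field
import re


@dataclass
class BoardMember:
    name: str
    affiliation: str  # empty string if the site lists none


@dataclass
class JournalSite:
    # Hypothetical, simplified view of a scraped journal homepage
    title: str
    board: list[BoardMember] = field(default_factory=list)
    page_text: str = ""


def board_red_flag(site: JournalSite) -> float:
    """Share of editorial board members with no stated affiliation (1.0 if no board is listed)."""
    if not site.board:
        return 1.0
    missing = sum(1 for m in site.board if not m.affiliation.strip())
    return missing / len(site.board)


def sloppy_text_red_flag(site: JournalSite) -> float:
    """Crude stand-in for a grammar checker: doubled words plus sentences
    starting with a lowercase letter, normalized by sentence count and capped at 1."""
    sentences = [s.strip() for s in re.split(r"[.!?]+", site.page_text) if s.strip()]
    if not sentences:
        return 0.0
    doubled = len(re.findall(r"\b(\w+)\s+\1\b", site.page_text, flags=re.IGNORECASE))
    lowercase_starts = sum(1 for s in sentences if s[0].islower())
    return min(1.0, (doubled + lowercase_starts) / len(sentences))


# Hypothetical weights chosen for illustration only
WEIGHTS = {"board": 0.6, "text": 0.4}


def explain(site: JournalSite) -> dict[str, float]:
    """Per-criterion contributions, so a reviewer can see why a journal scored high."""
    return {
        "board": WEIGHTS["board"] * board_red_flag(site),
        "text": WEIGHTS["text"] * sloppy_text_red_flag(site),
    }


def suspicion_score(site: JournalSite) -> float:
    """Total suspicion score in [0, 1]: the sum of the weighted red flags."""
    return sum(explain(site).values())
```

Because the score is just a weighted sum, `explain` can report exactly how much each criterion contributed, which keeps the result inspectable rather than opaque.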

While Acuña admits the AI isn't flawless and believes human experts should have the final say, he emphasizes the urgency of curbing questionable publications, especially as skepticism about scientific integrity grows.

The Weight of Research on Shaky Foundations

In science, previous research forms the bedrock for new discoveries. Acuña warns, "If the foundation crumbles, the whole structure collapses." As pressure to publish mounts on researchers, particularly in countries such as China, India, and Iran, predatory journals are thriving by exploiting that need.

A New Hope for Research Publication Standards

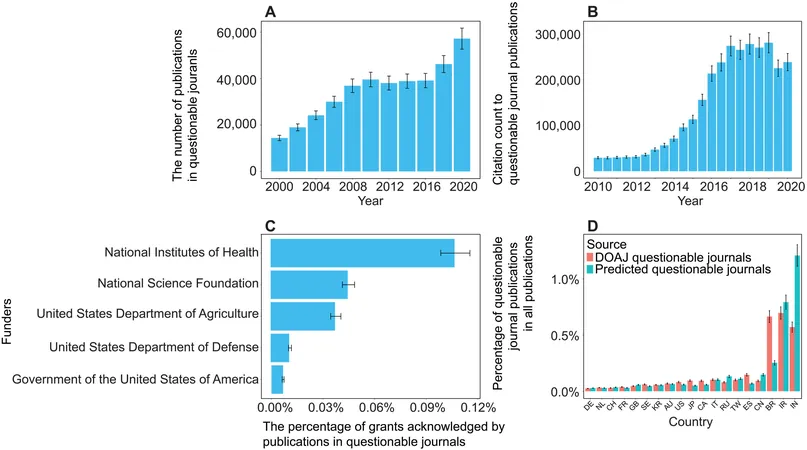

To tackle the problem, Acuña's team drew on data from the Directory of Open Access Journals (DOAJ) and screened a list of roughly 15,200 open-access journals. The AI initially flagged more than 1,400 of them as potentially problematic. A panel of human experts then reviewed these flags and concluded that more than 1,000 journals warranted concern.

Acuña envisions the AI tool as a first line of defense for pre-screening journals, with final vetting left in human hands. Unlike many AI systems that operate as black boxes, this one is designed to be transparent, showing which criteria drove each evaluation.
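The division of labor described above, where the model pre-screens and humans decide, can be sketched as a simple triage step. This is an illustrative assumption, not the team's code: the `Candidate` type, the `prescreen` and `final_list` functions, and the threshold parameter are hypothetical. The key property it encodes is that the model only orders and filters the queue, while a journal reaches the final list only if a human reviewer explicitly confirms it.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Candidate:
    journal: str
    score: float  # model's suspicion score in [0, 1]
    reasons: list[str] = field(default_factory=list)  # human-readable criteria behind the score
    human_verdict: Optional[bool] = None  # True = questionable; set only by a reviewer


def prescreen(candidates: list[Candidate], threshold: float) -> list[Candidate]:
    """First line of defense: keep journals at or above the threshold, highest score first."""
    flagged = [c for c in candidates if c.score >= threshold]
    return sorted(flagged, key=lambda c: c.score, reverse=True)


def final_list(reviewed: list[Candidate]) -> list[str]:
    """Only journals a human explicitly confirmed are listed; unreviewed or cleared ones are not."""
    return [c.journal for c in reviewed if c.human_verdict is True]
```

Usage: call `prescreen` on the full set of journals, hand the resulting queue (with each candidate's `reasons`) to reviewers, record their verdicts, and publish only the output of `final_list`. In the study's terms, the 1,400-plus flagged journals correspond to the pre-screened queue, and the 1,000-plus that experts judged concerning correspond to the final list.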

The Future of Safe Scientific Publishing

Though the tool is not yet publicly available, the researchers aim to offer it to universities and academic publishers soon. Acuña describes the initiative as a vital 'firewall for science', an essential layer of protection against misleading research.

Drawing a parallel to smartphone software updates, he adds, "Just as we anticipate bug fixes, the scientific community must be proactive about maintaining the integrity of our research foundations."
