Unlocking the Secrets of Protein Language Models: A Game Changer for Drug Discovery

2025-08-19

Author: Ming

A New Era for Protein Research

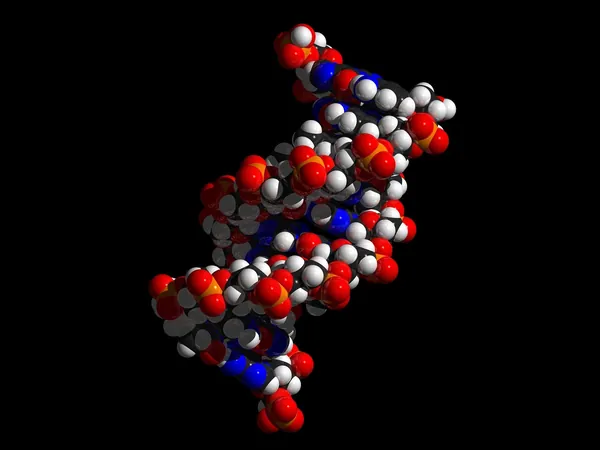

In recent years, models that predict protein structure and function have become widely used in biotech, helping scientists identify drug targets and design therapeutic antibodies. These protein language models (PLMs) are built on the same kind of architecture as large language models (LLMs) and can make highly accurate predictions. Until now, however, it has been unclear how they arrive at those predictions or which protein features most influence their outputs.

Peering into the 'Black Box' of AI

A new study from MIT aims to open up these models. The researchers applied a novel technique to look inside the so-called "black box" and identify which protein features a model takes into account when it makes a prediction. Understanding these internals could help researchers choose better models for a given task, which in turn could streamline the identification of new drugs and vaccine targets. Bonnie Berger, the study's senior author, noted, "Our work has broad implications for enhanced explainability in downstream tasks that rely on these representations."

The Evolution of Protein Language Models

The work builds on a line of research that began in 2018, when Berger and Tristan Bepler, then her graduate student at MIT, introduced the first protein language model. Like later models such as ESM2 and OmegaFold, it was based on LLMs. These models process long sequences of amino acids in much the same way that LLMs process sequences of words, and use what they learn to predict protein structure and function. They are also used for other tasks, such as identifying proteins that may bind to a specific drug.
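To make the text analogy concrete, the sketch below shows one simple way an amino acid sequence could be turned into integer tokens, the same kind of input an LLM receives for words. It is only an illustration: the vocabulary layout, the `UNKNOWN` id, and the example fragment are assumptions, and real models such as ESM2 define their own vocabularies and special tokens.

```python
# The 20 standard amino acids, written with their one-letter codes.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

# Hypothetical vocabulary: each residue maps to its position in the string above.
VOCAB = {residue: index for index, residue in enumerate(AMINO_ACIDS)}

# Hypothetical id used for any letter outside the 20 standard residues.
UNKNOWN = len(VOCAB)


def tokenize(sequence):
    """Convert a protein sequence into a list of integer token ids."""
    return [VOCAB.get(residue, UNKNOWN) for residue in sequence.upper()]


# A made-up fragment, used only to show the mapping.
tokens = tokenize("MKTAYIAK")
print(tokens)
```

A model then learns a numerical representation for each token in context, which is the representation the rest of this article is concerned with.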

Decoding Neural Networks

Historically, the complexity of protein language models made it hard to determine how they arrive at their predictions. According to Berger, the models produced useful results, but their internal logic remained opaque. The new study addresses this with a sparse autoencoder, an algorithm that lets researchers interpret how proteins are represented inside a neural network. Within the model itself, information about a protein is packed into a limited number of neurons, so each neuron ends up encoding several features at once. A sparse autoencoder expands that representation to thousands of nodes while allowing only a few of them to be active for any given protein. With more room to spread out, each node tends to encode a single feature, which makes the nodes much easier to interpret.
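The idea can be sketched in a few lines of dependency-free Python. This is a minimal illustration, not the study's implementation: the dimensions, the random untrained weights, and the top-k rule used to enforce sparsity are all assumptions, and the training step that would minimize reconstruction error is omitted.

```python
import random


def relu(values):
    """Clamp negative activations to zero."""
    return [max(0.0, v) for v in values]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def top_k_sparsify(hidden, k):
    """Keep the k largest activations and zero out the rest."""
    if k >= len(hidden):
        return list(hidden)
    ranked = sorted(range(len(hidden)), key=lambda i: hidden[i], reverse=True)
    keep = set(ranked[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(hidden)]


class SparseAutoencoder:
    """Expands a small dense vector into a wide, mostly zero code."""

    def __init__(self, dense_dim, sparse_dim, k, seed=0):
        rng = random.Random(seed)
        self.k = k
        # Random weights stand in for trained ones (assumption).
        self.encoder = [[rng.gauss(0, 0.1) for _ in range(dense_dim)]
                        for _ in range(sparse_dim)]
        self.decoder = [[rng.gauss(0, 0.1) for _ in range(sparse_dim)]
                        for _ in range(dense_dim)]

    def encode(self, embedding):
        return top_k_sparsify(relu(matvec(self.encoder, embedding)), self.k)

    def decode(self, code):
        return matvec(self.decoder, code)


# Hypothetical 8-dimensional protein embedding expanded to 64 nodes.
rng = random.Random(1)
embedding = [rng.gauss(0, 1) for _ in range(8)]
sae = SparseAutoencoder(dense_dim=8, sparse_dim=64, k=4)
code = sae.encode(embedding)
reconstruction = sae.decode(code)
active_nodes = [i for i, value in enumerate(code) if value > 0]
print(len(code), active_nodes)
```

Because at most `k` nodes are nonzero for any input, a trained version of this model is pushed to dedicate individual nodes to individual features, which is what makes the expanded representation easier to read than the original dense one.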

Harnessing AI for Analysis

Once they had these sparse representations, the researchers used the AI assistant Claude to analyze thousands of them. By comparing the representations with known protein features, such as molecular function, protein family, and cellular location, Claude could identify which nodes correspond to which features and describe them in plain language. For example, it might report that a particular node appears to detect proteins involved in ion transport that are located in the plasma membrane.
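The matching step can be pictured with a much simpler stand-in. The sketch below does not use Claude and is not the study's method: it scores each node against each annotation with an F1 overlap and keeps the best match. The node names, protein ids, and annotation sets are all made up for illustration.

```python
def f1_score(active, labeled):
    """F1 overlap between proteins that activate a node and proteins with a label."""
    true_positives = len(active & labeled)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(active)
    recall = true_positives / len(labeled)
    return 2 * precision * recall / (precision + recall)


def best_annotation(active, annotations):
    """Return (score, name) for the annotation that best matches a node."""
    scored = [(f1_score(active, proteins), name)
              for name, proteins in annotations.items()]
    return max(scored)


# Hypothetical toy data: the proteins on which each sparse node is active.
node_activations = {
    "node_17": {"P1", "P2", "P3"},
    "node_42": {"P4", "P5"},
}

# Hypothetical known annotations for the same proteins.
annotations = {
    "ion transport": {"P1", "P2", "P3", "P6"},
    "plasma membrane": {"P1", "P2", "P6"},
    "kinase family": {"P4", "P5"},
}

labels = {node: best_annotation(active, annotations)
          for node, active in node_activations.items()}

for node, (score, name) in labels.items():
    print(f"{node}: {name} (F1 = {score:.2f})")
```

A language model adds something this kind of scoring cannot: it can combine several overlapping annotations into a single readable description of what a node responds to.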

The Future of Biological Insights

As researchers learn more about what these protein models encode, the potential for new biological discoveries grows. Knowing which features a particular model tracks can help scientists choose the most appropriate model for a given task and adjust the input they give it to get better results. Onkar Gujral, the study's lead author, expressed optimism that as these models become more powerful, analyzing their representations could reveal biology that researchers do not yet know about.

In short, the MIT findings show which features protein language models track, and they point toward more transparent and interpretable AI in the life sciences.
