Unlocking the Future of AI: Fine-Tuning vs. In-Context Learning for Powerful Language Models

2025-05-10

Author: John Tan

The Showdown: Fine-Tuning vs. In-Context Learning

In the fast-evolving world of artificial intelligence, customizing large language models (LLMs) is essential for tackling real-world tasks. Two popular methods have emerged: fine-tuning and in-context learning (ICL). A recent study from Google DeepMind and Stanford University compares how well the two methods generalize, and its findings bear directly on how developers build LLM applications.

Understanding the Techniques: How Do They Work?

Fine-tuning takes a pre-trained LLM and adjusts its internal parameters by training it further on a specialized dataset, much like tuning a musical instrument for a particular piece. This allows the model to learn new knowledge tailored to specific tasks. In-context learning (ICL), by contrast, doesn't alter the model's parameters. Instead, it places examples directly in the input prompt, and the LLM derives its answer from those examples.
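To make the contrast concrete, here is a minimal sketch of how the same facts could be packaged for each technique. The facts, the prompt wording, and the `{"text": ...}` record shape are illustrative assumptions, not the format used in the study or by any particular training library.

```python
from typing import Dict, List

# Hypothetical facts built from made-up terms (assumed for illustration).
FACTS: List[str] = [
    "A femp is larger than a glon.",
    "A glon is larger than a trask.",
]


def build_icl_prompt(facts: List[str], question: str) -> str:
    """In-context learning: the facts travel inside the prompt; weights stay fixed."""
    context = "\n".join(facts)
    return f"Facts:\n{context}\n\nQuestion: {question}\nAnswer:"


def build_finetuning_records(facts: List[str]) -> List[Dict[str, str]]:
    """Fine-tuning: each fact becomes a training record used to update the weights."""
    return [{"text": fact} for fact in facts]


prompt = build_icl_prompt(FACTS, "Is a trask larger than a femp?")
records = build_finetuning_records(FACTS)
```

With ICL the full `prompt` is sent on every request. With fine-tuning the `records` are consumed once during training, and later requests carry only the question.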

A Rigorous Comparison: Who Comes Out on Top?

To determine which method generalizes better to new tasks, the researchers designed controlled synthetic datasets built around imaginative structures such as fictional family trees. They ensured that every term in the data was novel, so the models could not fall back on anything learned during pre-training and had to rely on the data in front of them.

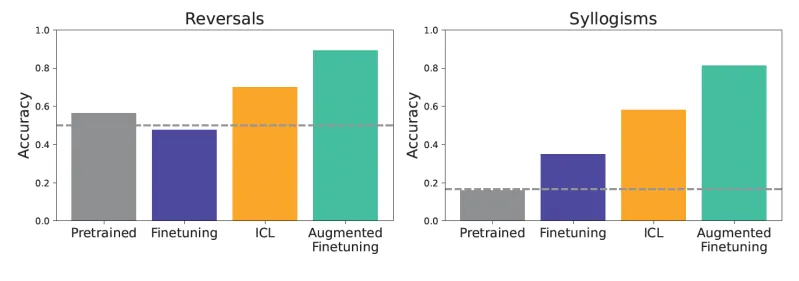

The tests included simple reversals and logical deductions, in which models had to infer relationships and facts that were never stated directly. In head-to-head evaluations, ICL consistently outperformed standard fine-tuning, generalizing better on tasks that required nuanced reasoning and deduction.
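As a rough illustration of what a reversal or deduction test looks like, the sketch below builds one item of each kind: the premises are what the model gets to see, and the held-out statement is what it must infer. The `EvalItem` type, the helper names, and the nonsense terms are all hypothetical and are not taken from the researchers' datasets.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class EvalItem:
    kind: str                  # "reversal" or "syllogism"
    premises: Tuple[str, ...]  # statements shown to the model
    held_out: str              # statement the model is expected to infer


def reversal_item(a: str, relation: str, inverse: str, b: str) -> EvalItem:
    """Show 'a relation b'; test whether the model infers 'b inverse a'."""
    return EvalItem("reversal", (f"{a} {relation} {b}.",), f"{b} {inverse} {a}.")


def syllogism_item(a: str, b: str, c: str) -> EvalItem:
    """Show 'All a are b' and 'All b are c'; test 'All a are c'."""
    premises = (f"All {a} are {b}.", f"All {b} are {c}.")
    return EvalItem("syllogism", premises, f"All {a} are {c}.")


# Made-up names, so nothing here can be answered from pre-training.
rev = reversal_item("Femp", "is the parent of", "is the child of", "Glon")
syl = syllogism_item("glons", "trasks", "yomps")
```

Here `rev.premises` holds "Femp is the parent of Glon." while `rev.held_out` is "Glon is the child of Femp.", which never appears in the data the model learns from.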

The Trade-Off Dilemma

While ICL generalized better, it comes at a cost: inference becomes more computationally expensive, because the examples have to be supplied in the prompt on every request. As Andrew Lampinen, a researcher at Google DeepMind, highlighted, ICL might save on initial training costs, but it requires more resources during operation. This creates a pivotal trade-off for developers.
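A back-of-the-envelope calculation shows why the operating cost grows. The token counts and request volume below are made-up numbers chosen only to illustrate the arithmetic; real figures depend on the model, the tokenizer, and the workload.

```python
def tokens_per_request(context_tokens: int, question_tokens: int, use_icl: bool) -> int:
    """Input tokens for one request; ICL resends the context every time."""
    return question_tokens + (context_tokens if use_icl else 0)


def total_input_tokens(
    n_requests: int, context_tokens: int, question_tokens: int, use_icl: bool
) -> int:
    """Input tokens processed across all requests."""
    return n_requests * tokens_per_request(context_tokens, question_tokens, use_icl)


# Assumed workload: 4,000-token context, 50-token questions, 10,000 requests.
icl_total = total_input_tokens(10_000, 4_000, 50, use_icl=True)
finetuned_total = total_input_tokens(10_000, 4_000, 50, use_icl=False)

print(icl_total)        # 40500000
print(finetuned_total)  # 500000
```

Under these assumptions the ICL deployment processes 81 times as many input tokens as the fine-tuned one, and the gap widens as the context grows.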

Innovating for the Future: A Hybrid Approach

Recognizing ICL's strengths, researchers proposed an innovative method to enhance fine-tuning: integrating ICL-generated examples into fine-tuning datasets. This hybrid strategy aims to bolster fine-tuning by incorporating diverse, nuanced inferences.

Exploring Data Augmentation Strategies

Two primary strategies emerged from this research:

1. **Local Strategy**: This involves generating single inferences by rephrasing or reversing individual sentences based on the training data.
2. **Global Strategy**: Here, the full dataset serves as context, allowing the LLM to create long links between established facts for deeper reasoning.
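The two strategies can be sketched as follows. This is an assumption-laden outline rather than the researchers' pipeline: `Generate` is a hypothetical stand-in for whatever model call is available (prompt in, text out), the prompt wording is invented, and `fake_generate` exists only so the example runs without a model.

```python
from typing import Callable, List

Generate = Callable[[str], str]  # hypothetical interface: prompt in, text out


def augment_local(facts: List[str], generate: Generate) -> List[str]:
    """Local strategy: one model call per fact, seeing only that fact."""
    return [generate(f"Rephrase or reverse this statement:\n{fact}") for fact in facts]


def augment_global(facts: List[str], generate: Generate) -> List[str]:
    """Global strategy: one model call with the full dataset as context."""
    context = "\n".join(facts)
    prompt = f"Given all of these facts:\n{context}\nList inferences that link them."
    return [line.strip() for line in generate(prompt).splitlines() if line.strip()]


def build_augmented_dataset(facts: List[str], generate: Generate) -> List[str]:
    """Original facts plus both kinds of generated inferences, ready for fine-tuning."""
    return list(facts) + augment_local(facts, generate) + augment_global(facts, generate)


def fake_generate(prompt: str) -> str:
    """Placeholder for a real model call; returns a tagged dummy string."""
    return f"[model output for a {len(prompt.splitlines())}-line prompt]"


facts = ["Femp is the parent of Glon.", "Glon is the parent of Trask."]
dataset = build_augmented_dataset(facts, fake_generate)
```

In a real setup `fake_generate` would be replaced by a call to the LLM being customized, and the generated lines would likely need filtering before they are added to the fine-tuning set.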

Amazing Outcomes: Augmented Fine-Tuning

The results were impressive: models fine-tuned on the ICL-augmented datasets significantly outperformed both traditional fine-tuning and plain ICL. This means that companies could derive more accurate, contextually relevant responses, enhancing their LLM applications.

Future Implications: A Smart Investment for Enterprises

This pioneering research suggests that investing in ICL-augmented datasets could yield substantial benefits for businesses. Although augmented fine-tuning might add extra costs, it provides a lasting solution by improving generalization over time and reducing the necessity for costly context every time the model is deployed.
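One way to reason about whether the extra up-front cost pays off is a simple break-even estimate. The function and every number below are hypothetical; they only show the shape of the calculation, with costs expressed in arbitrary units.

```python
import math


def break_even_requests(
    one_time_cost: float, icl_cost_per_request: float, finetuned_cost_per_request: float
) -> int:
    """Requests needed before the per-request savings cover the one-time cost."""
    saving = icl_cost_per_request - finetuned_cost_per_request
    if saving <= 0:
        raise ValueError("fine-tuned serving must be cheaper per request than ICL")
    return math.ceil(one_time_cost / saving)


# Assumed: augmentation plus fine-tuning costs 200 units once,
# an ICL request costs 0.02 units, a fine-tuned request costs 0.001 units.
n = break_even_requests(200.0, 0.02, 0.001)
print(n)  # 10527
```

Below that request volume, plain ICL would be the cheaper option under these assumptions; above it, the augmented fine-tuned model comes out ahead.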

The Road Ahead

While this research opens new avenues for customizing LLMs, further studies are still needed. The findings indicate a compelling case for enterprises to explore augmented fine-tuning when facing challenges with traditional fine-tuning alone. Ultimately, the quest to decipher how models learn and adapt is just beginning, setting the stage for revolutionary advances in AI technology.
