Unlock the Future of AI: Mastering LLM Agents with MCP-RL and ART

2025-08-09

Author: Sarah

Introduction

Imagine large language models (LLMs) that interact seamlessly with complex, real-world systems. The Model Context Protocol (MCP) is a framework that lets LLMs connect to external systems such as APIs and databases without custom integration code for each one. The idea is appealing, but getting agents to use these tools effectively across multi-step tasks has remained difficult. MCP-RL sets out to address that problem.

What Is MCP-RL?

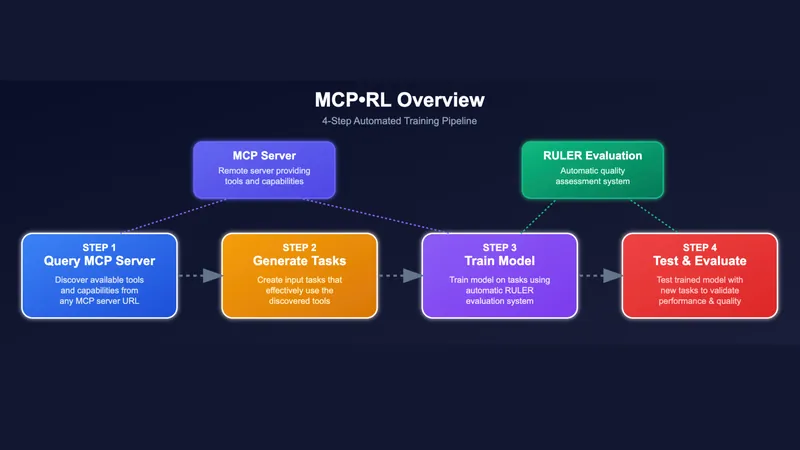

MCP-RL is a reinforcement learning (RL) protocol that lets an LLM agent adapt to an MCP server on its own. Given only the server's URL, the agent can discover the available tools and learn to use them. Here's what it brings to the table:

- **Instant Tool Discovery:** The agent introspects the server to uncover available functions, APIs, and schemas.
- **Dynamic Task Creation:** Synthetic tasks are generated in real time, tailored to the tools the server exposes.
- **Performance Benchmarking:** A scoring system called RULER evaluates agent performance without labeled data.
- **Continuous Optimization:** Agents refine their skills iteratively over repeated training rounds.
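As a rough illustration of the tool discovery step, the sketch below builds the JSON-RPC `tools/list` request that MCP defines and summarizes a server's reply. It is not ART's own code: the helper names, the `get_forecast` and `list_cities` tools, and the sample response are hypothetical, and the transport (HTTP or stdio) is left out so the example runs offline.

```python
import json
from typing import Dict, List


def build_list_tools_request(request_id: int = 1) -> str:
    """Serialize a JSON-RPC 2.0 request for the MCP `tools/list` method."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": "tools/list"})


def summarize_tools(response_text: str) -> Dict[str, List[str]]:
    """Map each tool name to the sorted parameter names in its input schema."""
    response = json.loads(response_text)
    tools = response.get("result", {}).get("tools", [])
    return {
        tool["name"]: sorted(tool.get("inputSchema", {}).get("properties", {}))
        for tool in tools
    }


# Hypothetical reply from a weather-style MCP server (illustrative data only).
SAMPLE_RESPONSE = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "get_forecast",
                "description": "Weather forecast for a city",
                "inputSchema": {
                    "type": "object",
                    "properties": {
                        "city": {"type": "string"},
                        "days": {"type": "integer"},
                    },
                    "required": ["city"],
                },
            },
            {
                "name": "list_cities",
                "description": "List supported cities",
                "inputSchema": {"type": "object", "properties": {}},
            },
        ]
    },
})

if __name__ == "__main__":
    print(build_list_tools_request())
    print(summarize_tools(SAMPLE_RESPONSE))
```

The summary of names and parameters is the kind of information a task generator would need before it can write synthetic tasks for the server.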

Introducing ART: The Agent Reinforcement Trainer

MCP-RL is built on ART, the Agent Reinforcement Trainer, which runs the RL pipeline and works with popular models such as Qwen and Llama. With ART, you can expect:

- **Decoupled Infrastructure:** Inference and training are separated, which makes agent training and performance evaluation easier to manage.
- **Easy Integration:** ART can be added to existing systems with minimal disruption.
- **Advanced Fine-Tuning:** ART uses the GRPO algorithm to improve stability and training efficiency without requiring labeled datasets.
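To give a feel for the GRPO step, here is a minimal sketch of the group-relative advantage calculation at the heart of the algorithm: each rollout's reward is compared with the mean of its group and scaled by the group's standard deviation. The function name, the `eps` constant, and the reward values are illustrative assumptions, and ART's actual implementation may differ in its details.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize rewards within one group of rollouts for the same task.

    Rollouts that beat the group average get a positive advantage and the
    rest get a negative one, so no absolute reward scale or labels are needed.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation of the group
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Hypothetical scores for four attempts at the same synthetic task.
    group_rewards = [0.2, 0.9, 0.5, 0.4]
    for reward, advantage in zip(group_rewards, group_relative_advantages(group_rewards)):
        print(f"reward={reward:.1f} advantage={advantage:+.2f}")
```

Because only the ordering and spread within a group matter, a relative scorer is enough to drive training. A group in which every rollout scores the same yields zero advantages and therefore no learning signal.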

Code Walkthrough: Transforming LLMs with MCP-RL

At a high level, specializing an LLM with ART involves three steps:

- **Automated Task Generation:** No human input is needed. The system creates tasks that exercise the available tools.
- **Effective Execution:** Agents perform tool calls and record their interactions for later scoring.
- **Innovative Scoring with RULER:** This adaptive scoring mechanism assesses performance dynamically, so agents keep improving in challenging environments.
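The execution and scoring steps can be sketched as follows. This is a toy stand-in rather than ART or RULER code: a fixed plan replaces the model's own tool choices, a simple heuristic replaces the judge, and the sketch assumes that scores are assigned relative to the other rollouts in the same group. All class names, function names, and the `get_forecast` tool are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class ToolCall:
    name: str
    arguments: Dict[str, Any]
    result: Any


@dataclass
class Trajectory:
    task: str
    calls: List[ToolCall] = field(default_factory=list)
    answer: str = ""


def run_rollout(
    task: str,
    plan: List[Tuple[str, Dict[str, Any]]],
    tools: Dict[str, Callable[..., Any]],
) -> Trajectory:
    """Execute a fixed plan of (tool_name, arguments) steps and log each call."""
    trajectory = Trajectory(task=task)
    for name, arguments in plan:
        result = tools[name](**arguments)
        trajectory.calls.append(ToolCall(name, arguments, result))
    # The last tool result stands in for the agent's final answer.
    trajectory.answer = str(trajectory.calls[-1].result) if trajectory.calls else ""
    return trajectory


def rank_scores(
    trajectories: List[Trajectory], judge: Callable[[Trajectory], float]
) -> List[float]:
    """Score a group relative to itself: best gets 1.0, worst gets 0.0."""
    order = sorted(range(len(trajectories)), key=lambda i: judge(trajectories[i]))
    if len(order) == 1:
        return [1.0]
    scores = [0.0] * len(trajectories)
    for rank, index in enumerate(order):
        scores[index] = rank / (len(order) - 1)
    return scores


def prefer_short(trajectory: Trajectory) -> float:
    """Hypothetical judge: an answer reached with fewer tool calls is better."""
    return -len(trajectory.calls) if trajectory.answer else float("-inf")


# Hypothetical tool implementation standing in for a real MCP server.
TOOLS = {"get_forecast": lambda city, days=1: f"{city}: sunny for {days} days"}
TASK = "What is the two-day forecast for Oslo?"
direct = run_rollout(TASK, [("get_forecast", {"city": "Oslo", "days": 2})], TOOLS)
redundant = run_rollout(
    TASK,
    [("get_forecast", {"city": "Oslo"}), ("get_forecast", {"city": "Oslo", "days": 2})],
    TOOLS,
)
scores = rank_scores([direct, redundant], prefer_short)

if __name__ == "__main__":
    print(scores)
```

Scores produced this way only need to be consistent within a group, which is what a group-based method such as GRPO consumes.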

How MCP-RL Redefines Versatility

MCP-RL lets LLM agents:

- **Discover Tools Automatically:** Learn which API calls are available without needing domain-specific knowledge.
- **Generate Robust Scenarios:** Use templates or prompts to create relevant tasks that cover both simple and intricate use cases.
- **Adapt to Real-World Challenges:** Once agents can handle synthetic scenarios, they carry those skills over to real user requests.
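The template route to scenario generation might look roughly like this. The templates, the `generate_tasks` helper, and the tool descriptions are all hypothetical; a real pipeline could just as well prompt an LLM with the tool schemas instead of filling in fixed strings.

```python
from typing import Any, Dict, List

# Hypothetical templates: one simple scenario and one multi-step scenario.
TEMPLATES = [
    "Use the `{name}` tool to answer a simple question. Tool description: {description}",
    "Solve a multi-step problem that needs `{name}` with these inputs: {params}.",
]


def generate_tasks(tools: List[Dict[str, Any]]) -> List[str]:
    """Fill every template once per discovered tool."""
    tasks = []
    for tool in tools:
        properties = tool.get("inputSchema", {}).get("properties", {})
        params = ", ".join(sorted(properties)) or "no inputs"
        for template in TEMPLATES:
            tasks.append(
                template.format(
                    name=tool["name"],
                    description=tool.get("description", "n/a"),
                    params=params,
                )
            )
    return tasks


# Hypothetical tool metadata, shaped like entries in an MCP tool listing.
EXAMPLE_TOOLS = [
    {
        "name": "get_forecast",
        "description": "Weather forecast for a city",
        "inputSchema": {"properties": {"city": {}, "days": {}}},
    },
    {"name": "list_cities", "description": "List supported cities"},
]

if __name__ == "__main__":
    for task in generate_tasks(EXAMPLE_TOOLS):
        print(task)
```

Each generated string can then serve as the task prompt for a group of rollouts.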

Real-World Applications and Impact

MCP-RL's benefits translate into practical advantages:

- **Quick Deployment:** It works with any MCP server, and no proprietary access is required.
- **Broad Applications:** Agents can be trained for tasks ranging from weather updates to data analysis.
- **Exceptional Performance:** It reportedly achieved state-of-the-art results on two-thirds of public benchmarks, surpassing existing specialist agents.

Practical Integration and Versatility

Getting started is as simple as:

- **Installation:** Easy setup using `pip install openpipe-art`.
- **Flexible Deployment:** Use either local or cloud environments and integrate with leading backends.
- **Customization Options:** Advanced users can modify various components to suit their specific needs.

Conclusion

With MCP-RL and ART, an autonomous, self-improving LLM agent is now within practical reach. Instead of hand-building training pipelines for every tool, you can point an agent at an MCP server and let it learn from synthetic tasks, whether the server exposes public APIs or a custom enterprise environment. The result is a path to scalable, reliable agent performance.
