Revolutionizing AI: New Framework Combines MORL with Normative Behaviour Learning

2025-09-04

Author: Siti

AI's Growing Role in Our Lives

Artificial intelligence (AI) is increasingly woven into the fabric of our daily existence, taking on roles once reserved for humans. From self-driving cars to smart urban planning, AI is becoming indispensable. At the heart of these innovations lie autonomous agents capable of making decisions without human oversight. To act effectively in an unpredictable real world, these agents rely on machine learning (ML) to adapt their behavior.

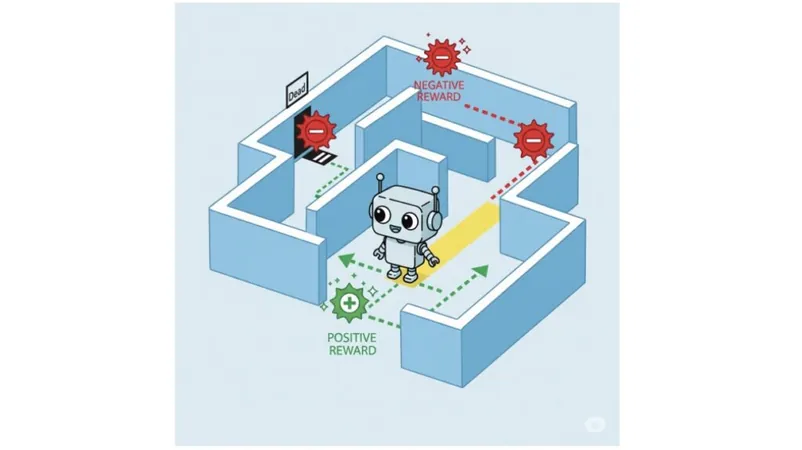

Reinforcement Learning: A Game Changer

One of the most impactful ML techniques is reinforcement learning (RL), which trains agents to choose good actions in a given environment. An RL agent interacts with its surroundings, receives rewards or penalties depending on its choices, and gradually learns to maximize its cumulative reward. RL agents have demonstrated remarkable capabilities, outperforming expert humans in games and learning to control complex systems, and they are increasingly studied for applications such as self-driving cars.
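To make this reward-driven learning loop concrete, here is a minimal sketch of tabular Q-learning, one classic RL algorithm. It is not taken from the research described in this article: the five-cell corridor environment, its reward of 1 at the goal, and the hyperparameters (`alpha`, `gamma`, `epsilon`, `max_steps`) are all illustrative assumptions.

```python
import random

# Hypothetical toy environment: a corridor of 5 cells.
# The agent starts in cell 0 and earns a reward of 1 for reaching cell 4.
N_STATES = 5
GOAL = N_STATES - 1
ACTIONS = (-1, +1)  # move left, move right


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def argmax_random(values, rng):
    """Index of the largest value, breaking ties at random."""
    best = max(values)
    return rng.choice([i for i, v in enumerate(values) if v == best])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    rng = random.Random(seed)
    # q[s][i] estimates the discounted return of taking ACTIONS[i] in state s.
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy: explore occasionally, otherwise exploit current estimates.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = argmax_random(q[state], rng)
            next_state, reward, done = step(state, ACTIONS[a])
            # Temporal-difference update toward reward + discounted best next value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best known action in every non-goal state."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[s][i])] for s in range(GOAL)]


if __name__ == "__main__":
    print(greedy_policy(q_learning()))
```

The agent is never told where the goal is; it discovers that moving right pays off purely from the rewards it receives, which is the trial-and-error principle described above.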

The Need for Ethical and Normative Compliance

However, power without constraints can lead to undesirable outcomes. When RL agents operate near humans, their actions must align with social, legal, and ethical norms. A self-driving car could follow every safety rule and still behave in socially unacceptable ways, such as aggressively weaving through traffic, which unsettles other road users and makes human error more likely.

Beyond Basic Safety: Understanding Norms

Norms cover a broad spectrum of obligations, permissions, and prohibitions: they describe how an agent ought to behave rather than stating facts about the world. This makes them harder to handle than the safety constraints that traditional safe RL methods express in temporal logic. Traffic regulations, for instance, contain conditional norms, such as the obligation to stop when a pedestrian is at a crossing, as well as norms that come into force only after another, more general rule has already been violated.
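The difference between a conditional norm and a norm that only comes into force once another norm has been violated (a contrary-to-duty norm) can be sketched as a small checker over a trace of states. This is a hypothetical illustration, not the formal logic used in the research: the `Norm` class, propositions such as `pedestrian_at_crossing`, and the `report_incident` duty are invented for the example, and the checker simplifies timing by activating a contrary-to-duty norm only from the step after its primary norm is violated.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Set, Tuple

State = Dict[str, bool]  # truth values of propositions at one time step


@dataclass
class Norm:
    name: str
    kind: str                         # "obligation" or "prohibition"
    applies: Callable[[State], bool]  # condition under which the norm is in force
    target: str                       # proposition the norm requires or forbids
    ctd_of: Optional[str] = None      # if set, dormant until that norm has been violated


def is_violated(norm: Norm, state: State, past: Set[str]) -> bool:
    # A contrary-to-duty norm only becomes active after its primary norm was violated.
    if norm.ctd_of is not None and norm.ctd_of not in past:
        return False
    # A conditional norm is only checked when its condition holds.
    if not norm.applies(state):
        return False
    holds = state.get(norm.target, False)
    return (not holds) if norm.kind == "obligation" else holds


def check_trace(norms: List[Norm], trace: List[State]) -> List[Tuple[int, str]]:
    """Return (time step, norm name) for every violation along the trace."""
    past: Set[str] = set()
    violations: List[Tuple[int, str]] = []
    for t, state in enumerate(trace):
        now = [n.name for n in norms if is_violated(n, state, past)]
        violations.extend((t, name) for name in now)
        past.update(now)  # CTD norms see these violations from the next step on
    return violations


# Conditional obligation: stop whenever a pedestrian is at the crossing.
STOP = Norm("stop_for_pedestrian", "obligation",
            lambda s: s.get("pedestrian_at_crossing", False), "stopped")
# Hypothetical contrary-to-duty obligation: after failing to stop, report the incident.
REPORT = Norm("report_incident", "obligation",
              lambda s: True, "reported", ctd_of="stop_for_pedestrian")

if __name__ == "__main__":
    trace = [
        {"pedestrian_at_crossing": True, "stopped": False},  # primary norm violated
        {"reported": False},                                 # CTD now active and violated
        {"reported": True},                                  # CTD fulfilled
    ]
    print(check_trace([STOP, REPORT], trace))
```

A car that stops for the pedestrian never activates `REPORT` at all, which is exactly what distinguishes a contrary-to-duty norm from an ordinary conditional one.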

Introducing Normative Restraining Bolts (NRBs)

To tackle these challenges, researchers proposed Normative Restraining Bolts (NRBs), an adaptation of the Restraining Bolt method that steers an agent toward norm-compliant behavior while it continues to pursue its own objectives. The technique modifies the agent's learning process by adding rewards tied to compliance with specific norms, and it can even handle contrary-to-duty obligations (CTDs), which apply only once another norm has been violated. However, this approach can sometimes lead to suboptimal behavior, including procrastination.
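Folding norms into the reward signal turns compliance into a trade-off against the task reward. The minimal sketch below assumes a fixed linear penalty per norm violation; the article does not give the actual NRB reward formula, and `penalty`, `SHORTCUT`, and `DETOUR` are hypothetical. It shows that whether a return-maximizing agent prefers the compliant option depends on how large that penalty is.

```python
from typing import Sequence, Tuple

Trajectory = Tuple[Sequence[float], Sequence[int]]  # (task rewards, violations per step)


def shaped_reward(task_reward: float, violations: int, penalty: float) -> float:
    """Task reward minus a fixed penalty per norm violation (an assumed linear form)."""
    return task_reward - penalty * violations


def shaped_return(traj: Trajectory, penalty: float, gamma: float = 1.0) -> float:
    """Discounted sum of shaped rewards along one trajectory."""
    rewards, violations = traj
    return sum(
        (gamma ** t) * shaped_reward(r, v, penalty)
        for t, (r, v) in enumerate(zip(rewards, violations))
    )


# Two hypothetical ways of completing the same task.
SHORTCUT: Trajectory = ([4.0, 6.0], [0, 1])  # more task reward, one violation
DETOUR: Trajectory = ([3.0, 3.0], [0, 0])    # less task reward, fully compliant


def preferred(penalty: float) -> str:
    """Which trajectory a return-maximizing agent would prefer under this penalty."""
    if shaped_return(SHORTCUT, penalty) > shaped_return(DETOUR, penalty):
        return "shortcut"
    return "detour"


if __name__ == "__main__":
    for penalty in (1.0, 3.0, 5.0):
        print(penalty, preferred(penalty))
```

With a small penalty the violating shortcut still wins; compliance only becomes the better option once the penalty exceeds the extra task reward. In this simplified setting, the outcome hinges entirely on a hand-chosen number.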

A Novel Solution: Ordered Normative Restraining Bolts (ONRBs)

Building on NRBs, the latest development is the Ordered Normative Restraining Bolt (ONRB), which formulates norms as objectives in a multi-objective reinforcement learning (MORL) problem. Framing the problem this way lets researchers ensure that agents minimize norm violations and correctly prioritize conflicting norms. The norms can also be adjusted over time, so that an agent's behavior keeps pace with changing societal standards.
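One common way to prioritize objectives in MORL is a lexicographic order: a higher-priority objective always wins, and lower-priority objectives only break ties. The sketch below assumes that ordering; the action names, the value vectors in `Q_VALUES`, and the tolerance `tol` are hypothetical, and the ONRB paper's exact formulation may differ. Each action is scored by a vector ordered as (higher-priority norm, lower-priority norm, task reward).

```python
from typing import Dict, Optional, Sequence, Tuple

Vector = Tuple[float, ...]


def lex_better(a: Sequence[float], b: Sequence[float], tol: float = 1e-9) -> bool:
    """True if a beats b lexicographically: earlier objectives dominate later ones."""
    for x, y in zip(a, b):
        if x > y + tol:
            return True
        if y > x + tol:
            return False
    return False  # equal (within tol) on every objective


def lex_best_action(q: Dict[str, Vector]) -> Optional[str]:
    """Action whose value vector is lexicographically best (first one wins ties)."""
    best: Optional[str] = None
    for action, values in q.items():
        if best is None or lex_better(values, q[best]):
            best = action
    return best


def swap_norm_priority(q: Dict[str, Vector]) -> Dict[str, Vector]:
    """Exchange the first two objectives, i.e. reverse the priority of the two norms."""
    return {a: (v[1], v[0]) + tuple(v[2:]) for a, v in q.items()}


# Hypothetical value vectors: (higher-priority norm, lower-priority norm, task reward).
# Norm entries are negative expected violation counts, so 0.0 means full compliance.
Q_VALUES: Dict[str, Vector] = {
    "go_through": (-1.0, 0.0, 10.0),  # breaks the higher-priority norm
    "wait": (0.0, -1.0, 4.0),         # breaks only the lower-priority norm
    "detour": (0.0, -1.0, 3.0),       # same norm profile as "wait", less task reward
}

if __name__ == "__main__":
    print(lex_best_action(Q_VALUES))
    print(lex_best_action(swap_norm_priority(Q_VALUES)))
```

Unlike a weighted sum, no amount of task reward can make up for a violation of a higher-priority norm here, and simply reordering the objectives changes which norm wins a conflict, a very simplified analogue of updating norm priorities.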

Putting ONRBs to the Test in Simulation

To test the ONRB framework, researchers applied it in a grid-world simulation in which agents had to manage resources while complying with complex, sometimes conflicting, norms. The results showed that the agents could navigate norm conflicts, adjust their behavior accordingly, and prioritize norms as intended.

Conclusion: Shaping the Future of AI

By combining reinforcement learning with logical frameworks, researchers are moving toward AI agents that not only perform tasks efficiently but also stay within ethical and normative bounds. The work was recognized as a distinguished paper at IJCAI 2025, highlighting the goal of AI that works 'right' as well as 'well.' This emerging research area is worth watching, as it could change our interaction with technology for the better.
