Revolutionary AI Model Translates Text Commands into Robot and Avatar Motion

2025-05-08

Author: Ming

Meet MotionGlot: The Future of Robotics!

In an exciting breakthrough, researchers at Brown University have unveiled MotionGlot, a cutting-edge AI model that transforms simple text commands into dynamic movements for robots and animated figures. This innovative technology mirrors the capabilities of AI text generators like ChatGPT, but it translates words into physical actions!

How Does It Work?

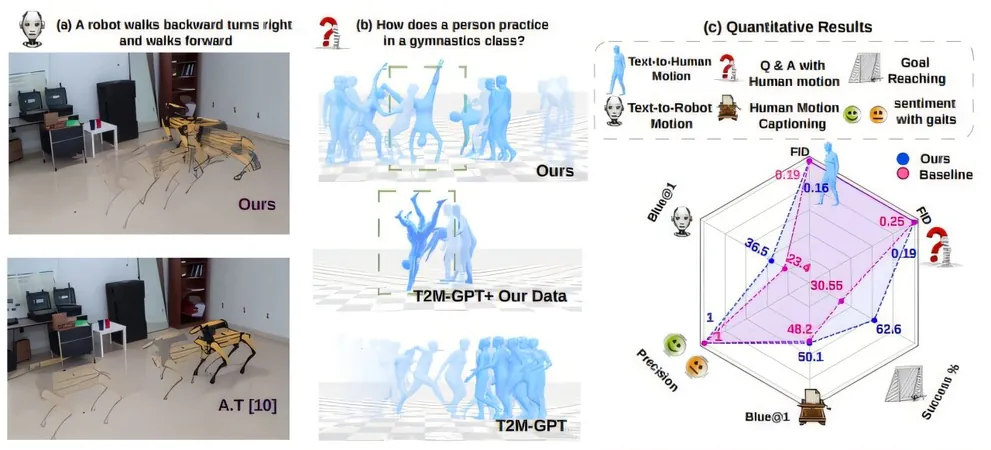

Imagine typing a straightforward command like "walk forward a few steps and take a right." With MotionGlot, that text becomes a reality: the AI generates motion sequences that can be executed by different robotic embodiments, from humanoid figures to four-legged robots.

"We're treating motion as simply another language," explains Sudarshan Harithas, the Ph.D. student leading the project. "Just as we can translate between spoken languages, we’re now able to convert text-based commands into actions across different robot types." This opens up a universe of potential applications!

A New Language for Motion

The breakthrough lies in treating motion like a language. The AI employs a method similar to how large language models operate, breaking down movements into discrete 'tokens.' For instance, the position of a robot's legs during walking can be tokenized, allowing the AI to predict subsequent movements fluidly.
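To make the token idea concrete, here is a minimal sketch in Python. It is not MotionGlot's actual tokenizer or model, which the article does not describe. It assumes a tiny hand-written codebook of hip-joint angles (`CODEBOOK`), nearest-neighbor lookup to turn each pose frame into a token, and a toy bigram counter standing in for the far larger language-model-style predictor. All names and numbers are hypothetical.

```python
import math
from collections import Counter, defaultdict

# Hypothetical codebook: each entry is a (left_hip, right_hip) angle pair in radians.
CODEBOOK = [
    (0.0, 0.0),    # token 0: standing
    (0.4, -0.4),   # token 1: left leg forward
    (-0.4, 0.4),   # token 2: right leg forward
]

def tokenize(frames):
    """Map each continuous pose frame to the index of its nearest codebook entry."""
    return [
        min(range(len(CODEBOOK)), key=lambda i: math.dist(frame, CODEBOOK[i]))
        for frame in frames
    ]

def detokenize(tokens):
    """Map token indices back to their representative poses."""
    return [CODEBOOK[t] for t in tokens]

def fit_bigram(tokens):
    """Count which token follows which (a toy stand-in for a learned model)."""
    successors = defaultdict(Counter)
    for cur, nxt in zip(tokens, tokens[1:]):
        successors[cur][nxt] += 1
    return successors

def predict_next(successors, token):
    """Return the most frequently observed successor of `token`."""
    return successors[token].most_common(1)[0][0]

# A short, noisy walking clip: stand, then alternate legs.
walk_frames = [
    (0.05, -0.02),
    (0.35, -0.45),
    (-0.38, 0.41),
    (0.42, -0.36),
    (-0.41, 0.39),
]

walk_tokens = tokenize(walk_frames)
model = fit_bigram(walk_tokens)
```

In this sketch `walk_tokens` is `[0, 1, 2, 1, 2]`, and `predict_next(model, 2)` returns `1`: after "right leg forward," the most likely next token is "left leg forward." A real system would learn the codebook from data and predict over much longer contexts, but the pipeline has the same shape: quantize motion, predict the next token, decode back to poses.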

However, translating motions between different forms presents unique challenges. A human walking and a dog trotting are both engaging in 'walking,' yet their mechanics are drastically different. MotionGlot adeptly navigates this complexity, ensuring the correct movements are generated regardless of the robot's shape or structure.
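One way to picture how a single model might keep different bodies apart is sketched below in Python. This is an illustrative assumption, not a description of MotionGlot's internals: each embodiment gets its own block of token IDs in a shared vocabulary, and every motion sequence opens with a tag such as `<human>` or `<quadruped>`. The tag names, the codebook sizes, and the helper functions are all hypothetical.

```python
# Hypothetical special tokens and per-embodiment codebook sizes (kept tiny for clarity).
SPECIAL_TOKENS = ["<human>", "<quadruped>", "<eos>"]
CODEBOOK_SIZES = {"human": 4, "quadruped": 4}

def build_vocab():
    """Assign IDs to special tokens, then reserve a disjoint ID block per embodiment."""
    special_ids = {tag: i for i, tag in enumerate(SPECIAL_TOKENS)}
    offsets = {}
    next_id = len(SPECIAL_TOKENS)
    for body, size in CODEBOOK_SIZES.items():
        offsets[body] = next_id
        next_id += size
    return special_ids, offsets, next_id

def encode_motion(body, local_tokens, special_ids, offsets):
    """Wrap embodiment-local motion tokens with a body tag and an end marker."""
    shifted = [offsets[body] + t for t in local_tokens]
    return [special_ids[f"<{body}>"]] + shifted + [special_ids["<eos>"]]

special_ids, offsets, vocab_size = build_vocab()

# The same local token indices map to different shared IDs for each body.
human_seq = encode_motion("human", [0, 3], special_ids, offsets)
quad_seq = encode_motion("quadruped", [0, 3], special_ids, offsets)
```

Here `human_seq` is `[0, 3, 6, 2]` and `quad_seq` is `[1, 7, 10, 2]`. Because the ID blocks never overlap, a "walk" token for a human can never be mistaken for a "walk" token for a quadruped, even though both sequences live in the same vocabulary.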

Data-Driven Learning

To train this revolutionary model, the Brown researchers utilized extensive datasets rich in annotated motion data. One dataset, QUAD-LOCO, captures various actions performed by quadruped robots, while another, QUES-CAP, focuses on real human movements with detailed textual annotations.

Thanks to this comprehensive training, MotionGlot produces accurate motions even for commands it hasn't explicitly encountered before. Whether instructed to make a robot walk backward, turn left, or convey a joyful stride, the AI performs impressively.

The AI That Moves!

But it's not just about directives; MotionGlot can even respond to inquiries using movement. For example, ask it to demonstrate cardio activity, and you'll see a person jogging on screen!

A Bright Future Ahead

As co-author and computer science professor Srinath Sridhar notes, "These models thrive on massive data collection. The more data we gather, the more versatile and powerful our AI can become." With ongoing advancements, the future of robotics and animated figures utilizing natural language processing looks incredibly promising!
