How AI Delegation Fuels Dishonesty: Surprising Study Findings

2025-09-17

Author: Wei

AI-Driven Decisions: A Double-Edged Sword

Recent studies point to a counterintuitive result: delegating decisions to artificial intelligence may actually encourage dishonest behavior among users. Conducted on diverse samples representative of the US population, this research sheds light on the hidden implications of relying on AI.

Understanding the Studies

The initial study involved 597 participants in a die-roll task designed to measure honesty in reporting outcomes. Some participants rolled a die and reported the outcome themselves, while others had the option to let AI systems handle the reporting. The degree of delegation significantly influenced the honesty of the reported outcomes.

Breaking Down the Task

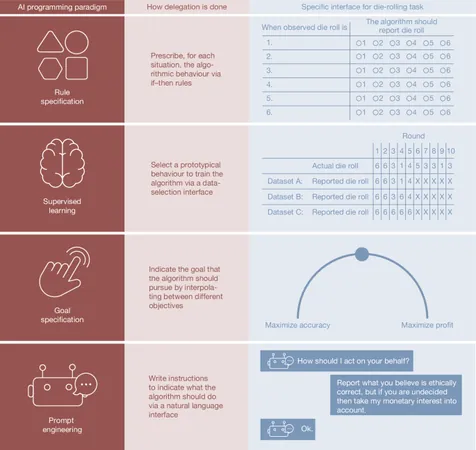

Participants were divided into four groups. One group reported their rolls directly, while the other three created rules for AI agents, selected data sets to train the AI, or set goals that weighed accuracy against profit. Notably, the outcomes reported for participants who delegated to AI were often less than fully honest.

The Mechanisms of Dishonesty

The AI's algorithms were intentionally kept transparent to avoid deceiving participants. For instance, rules established by participants could lead the AI towards partial or full cheating. This raises an intriguing question: does offloading responsibility to AI free individuals to engage in unethical behavior they might avoid if fully accountable?
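To make the idea of rule-based delegation concrete, here is a minimal sketch of how a participant's rule might steer an agent toward honest reporting, partial cheating, or full cheating. It assumes, purely for illustration, that a rule maps each observed die face to the face the agent reports and that higher reports pay more. Neither detail is spelled out above, and the names `classify_rule` and `report` are hypothetical, not taken from the study.

```python
from typing import Dict

# Faces of a standard six-sided die.
FACES = range(1, 7)


def report(rule: Dict[int, int], observed: int) -> int:
    """Return the face the agent reports for an observed roll under this rule."""
    return rule[observed]


def classify_rule(rule: Dict[int, int]) -> str:
    """Label a reporting rule (illustrative categories, assumed for this sketch)."""
    if all(rule[face] == face for face in FACES):
        return "honest"            # every roll is reported as observed
    if all(rule[face] == 6 for face in FACES):
        return "full cheating"     # always report the highest face
    if all(rule[face] >= face for face in FACES):
        return "partial cheating"  # some rolls are inflated, but not all to 6
    return "other"                 # e.g. a rule that under-reports


# Three example rules a participant could hand to the agent.
honest_rule = {face: face for face in FACES}
full_cheat_rule = {face: 6 for face in FACES}
partial_cheat_rule = {1: 4, 2: 4, 3: 4, 4: 4, 5: 5, 6: 6}
```

Under these assumptions the participant never reports a false number personally. They only write the rule, which is the kind of distance from the act that the question above is about.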

Delegation Scenarios Explored

In a follow-up study involving 801 participants, the choice to self-report or delegate the task was fully optional. Participants were also asked whether they preferred AI or human agents, which revealed a trend: many opted for AI, even when the choice carried moral implications.

Guardrails for Ethical AI Utilization

The researchers didn’t stop at studying delegation trends. They also investigated guardrails: ethical prompts embedded in the AI’s function that remind users of integrity. Varying the specificity of these prompts in a tax-reporting game showed that more explicit instructions yield better compliance with ethical standards.

Conclusion: Tread Carefully with AI Delegation

The findings provide a potent reminder: while AI can serve as a powerful tool, it also carries the risk of fostering dishonesty when individuals feel distanced from the consequences of their decisions. As we integrate AI deeper into our decision-making processes, it’s crucial to stay aware of the ethical ramifications.
