Bridging the Gap: How Teaching Robots to Learn Like Humans is Revolutionizing AI

2024-09-17

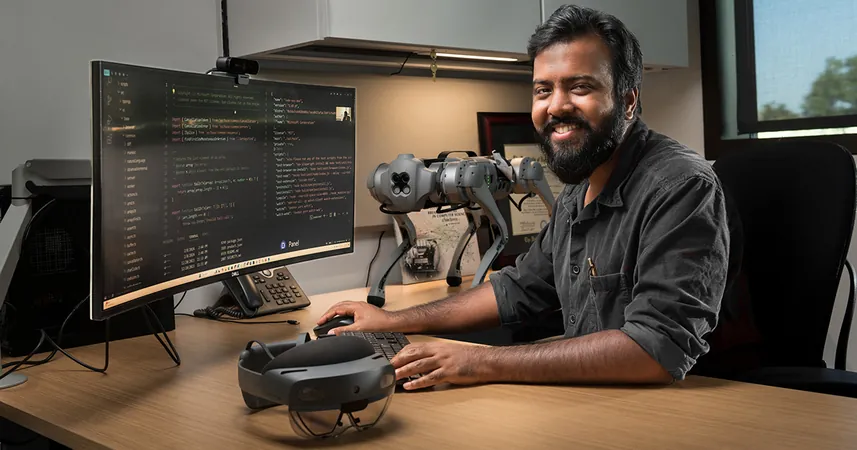

Author: John Tan

In a groundbreaking initiative at Purdue University, researchers are embarking on a quest that mirrors the early development stages of human infancy: teaching robots to navigate complex physical environments just as babies do. While human infants inherently learn through exploration and interaction with their surroundings, artificial intelligence systems often start as blank slates, devoid of any practical understanding of the world.

According to Aniket Bera, an associate professor of computer science and an AI specialist at the university, "A robot needs to interact with the world." The challenge lies in enabling robots to learn through experience, a feature embedded in human cognition but largely absent in traditional machine learning models. This research seeks to fill that crucial gap in AI development, which is vital for creating autonomous systems that can adapt and function successfully in real-world scenarios.

The Intricacies of Learning Through Movement

Just as a baby discovers the world by grasping a parent's hair or removing toys from a bucket, Bera's team is guiding robots through similar foundational experiences. Improving robots' ability to understand their physical surroundings could significantly expand their applications, from wilderness rescues to efficient food delivery.

This journey requires teaching robots basic concepts like shape recognition, language understanding, and the ability to respond to commands, all integral skills that enable them to process and react to their environment. The Institute for Physical Artificial Intelligence at Purdue is spearheading this initiative, capitalizing on existing knowledge to advance the future of AI.

From Physics to Dance: Lessons in Coordination

Humans intuitively grasp the principles of physics, understanding how to predict the behavior of objects without having formal training in fluid dynamics or parabolic motion. This intuition allows for everyday tasks like catching a ball or pushing a swing. However, for robots, each of these actions requires extensive programming.

Bera emphasizes the complexity of modeling motion for AI, stating, "Robots traditionally fail to extrapolate knowledge to different contexts." The same failure to generalize causes notable shortcomings in areas like facial recognition, where algorithms struggle to identify faces unlike those in their training data, often producing biases that compromise effectiveness.

The challenge extends to more artistic expressions, such as dance. While dancing may not seem essential for robots, mastering this skill can provide valuable insights into human movement and interaction. Bera's team is leveraging motion-capture technology to analyze various dance styles, aiding robots in understanding rhythm, coordination, and the dynamics inherent in human movements.

Sensorial Evolution: Enhancing Navigation in Real-Time

Navigational tasks pose a significant hurdle for robots, especially in unstructured environments where traditional GPS systems fall short. In scenarios like disaster response or crowded settings, it is crucial for robots to not only detect obstacles but also anticipate the movement of people around them.

Bera's research focuses on enabling robots to process multiple visual inputs, from their own sensors to external cameras, mimicking the nuanced way humans perceive their environment. This capability could be the difference between navigating a chaotic scene effectively and getting stuck in an endless loop of collisions with obstacles.

The Future: Learning from Humans

As robots are designed to learn more like humans, they face the daunting task of simulating complex human behaviors, decision-making processes, and emotional understanding. The quest is not just about programming; it's about fostering smart systems capable of emotional intelligence, which is essential for tasks ranging from caregiving to emergency assistance.

In essence, Bera summarizes the vision: "If robots can comprehend human emotions, behaviors, and environments, they can become more adaptive and successful in their roles." The implications of this research could redefine not just how we interact with machines, but also how machines might coexist and support human endeavors in the near future.

As we continue to explore the potential of AI, the journey to raise robots capable of truly understanding and engaging with their surroundings is just beginning. These intelligent systems could drastically transform everyday life, from enhancing personal robotics to revolutionizing industries worldwide. Stay tuned, as the future of AI is poised to be more human-like than ever before!
