AI Comes to the Rescue: Can Generative Technology Smash Vaccine Myths?

2025-04-16

Author: Arjun

A New Ally in the Fight Against Vaccine Misinformation

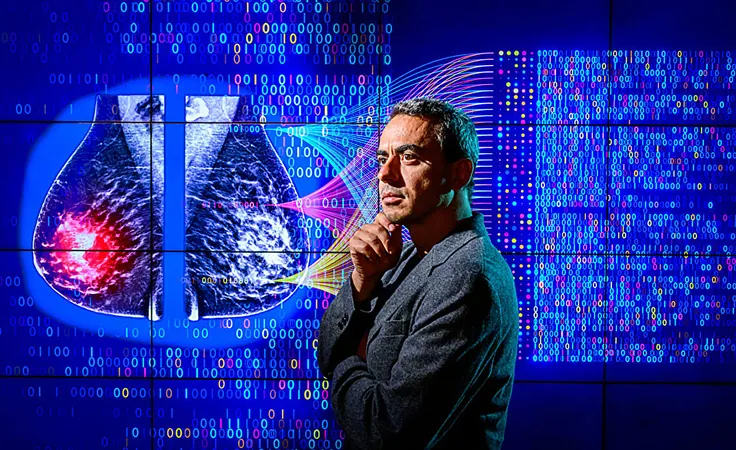

Public health experts are turning to artificial intelligence (AI) to counter widespread misinformation about vaccines. A study from the University of Michigan, led by assistant professor Hang Lu, offers new insights into how AI-generated messages tailored to individual personality traits could change the way vaccine information is communicated.

Tailored AI Messages: A Game Changer?

Rather than relying on generic fact-checks, which often fall flat, Lu's research used ChatGPT, the AI chatbot developed by OpenAI. The goal was to create messages tailored to individuals' personality traits, such as extraversion. These personalized messages kept the essential facts intact while adjusting their emotional tone and style, making the content more relatable.

Shocking Results: AI vs. Traditional Approaches

In an interview with The Michigan Daily, Lu said that the message tailored to extraverts performed well, in some cases outperforming corrections written by professionals. The surprise came with messages aimed at dispelling pseudoscientific beliefs about vaccines: these not only failed to persuade but also risked backfiring. Lu concluded that while AI can help generate targeted responses, it is not a be-all and end-all solution.

A Powerful Collaboration: AI and Healthcare Professionals

Neha Bhomia, a programmer analyst and adjunct lecturer at Michigan Medicine, envisions a future in which generative AI and healthcare providers work hand in hand to improve patient care. She considers personalizing public health messages by personality both innovative and crucial, arguing that vaccine hesitancy often stems from a deeper divide rooted in emotion and trust rather than from a simple lack of information.

Filling Gaps in Healthcare: The Promise of AI

Bhomia passionately believes that AI can bridge significant gaps in healthcare services—especially where there’s a shortage of providers. She argues that AI could enhance communication across language barriers, reach underserved communities, and offer tailored support grounded in data insights. Yet she stresses that ethical design and supervision are essential to ensure AI serves to build trust, not erode it.

The Cautious Voice: Is AI Overstepping?

Despite the excitement surrounding AI's potential, concerns about overreliance on technology loom large. Jon Zelner, an epidemiology professor at the university, cautions that AI-driven approaches may neglect structural problems in healthcare. He argues that when core issues such as lack of health insurance or historical mistrust of the health system go unaddressed, AI-generated messaging merely glosses over the real challenges.

Human Connection: The Heart of Public Health?

Zelner raises a further concern: the rush to adopt AI risks eroding the human connection that effective healthcare depends on. He advocates a more traditional approach, deploying public health nurses to engage directly with communities where vaccine hesitancy is high and to address people's concerns one on one. Although this strategy would require a larger financial investment, he argues that the resulting gains in vaccination rates would more than justify the cost.

In summary, while generative AI offers exciting possibilities for personalized public health messaging, experts urge caution. Countering vaccine misinformation effectively may require a blend of technology and the human touch.
