Why Memory Might Be the Secret Superpower of Algorithms

2025-07-13

Author: Ting

A Surprising Discovery in Computational Theory

On a July afternoon in 2024, theoretical computer scientist Ryan Williams set out to disprove a bold idea he had about the relationship between time and memory in computing. His initial finding suggested that even a small amount of memory could be as valuable as a large amount of time in carrying out a computation. The result seemed so improbable that he assumed he must be mistaken and had put his proof aside. But hours of scrutiny revealed no flaws; instead, the implications of the result began to dawn on him.

"I thought I was losing my mind," Williams said, reflecting on his unexpected findings. After further investigation and feedback from fellow researchers, he published his groundbreaking proof online to tremendous acclaim.

Unveiling the Power of Memory

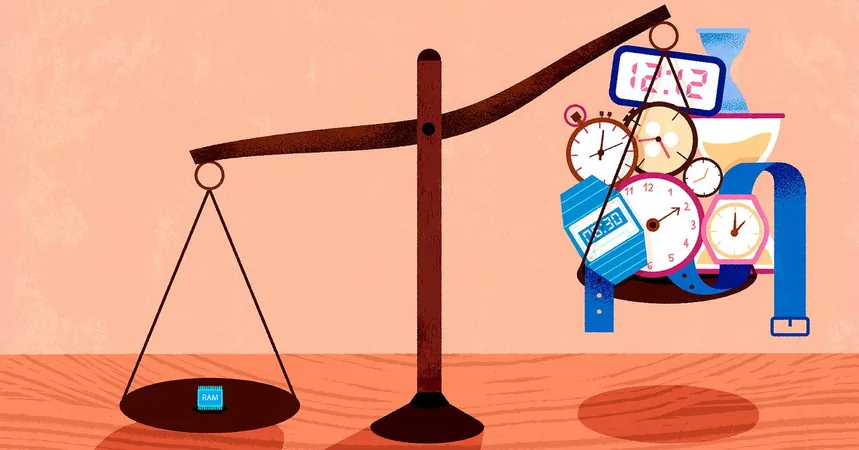

In computing, time and memory (also called space) are the two fundamental resources. An algorithm never needs more space than time, since each step can touch only a limited amount of memory, and for decades the best general guarantee was that the space required is only slightly smaller than the runtime. Williams' proof overturned that picture by establishing a general method for transforming any algorithm into a version that requires far less space, roughly the square root of its original runtime.
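To make the distinction between time and space concrete, here is a toy illustration in Python. It is not Williams' construction, only a minimal sketch of the everyday idea that reusing memory can shrink an algorithm's space without changing its answer. The function names `fib_table` and `fib_rolling` are hypothetical, chosen for this example.

```python
def fib_table(n: int) -> tuple[int, int]:
    """Compute the n-th Fibonacci number, keeping every intermediate value.

    Returns (result, number of stored values). The table grows by one
    entry per loop step, so space grows in proportion to running time.
    """
    table = [0, 1]
    for i in range(2, n + 1):
        table.append(table[i - 1] + table[i - 2])
    return table[n], len(table)


def fib_rolling(n: int) -> tuple[int, int]:
    """Compute the same number while reusing two memory cells.

    Returns (result, number of stored values). The loop still takes
    n steps, but old values are overwritten instead of kept.
    """
    prev, curr = 0, 1
    for _ in range(n):
        prev, curr = curr, prev + curr
    return prev, 2


if __name__ == "__main__":
    print(fib_table(10))
    print(fib_rolling(10))
```

Both functions take about the same number of steps, but the second stores only two values at any moment. The count here is of stored numbers rather than bits, which is a simplification. Williams' result is far more general: it applies to every computation, including ones where no such obvious reuse is visible.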

This result not only answered a long-standing question but also hinted at a new approach to one of computer science's oldest open problems: P versus PSPACE, which asks whether every problem that can be solved with a reasonable amount of memory can also be solved in a reasonable amount of time.
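In standard notation, where P is the class of problems solvable in polynomial time and PSPACE is the class solvable in polynomial space, the known relationship and the open question can be written as follows. This is the textbook formulation, added here for reference.

$$
\mathsf{P} \subseteq \mathsf{PSPACE}, \qquad \text{open question: } \mathsf{P} \stackrel{?}{=} \mathsf{PSPACE}
$$

The containment holds because an algorithm that runs for a polynomial number of steps can touch only a polynomial amount of memory. Whether the containment is strict remains unproven.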

The Mind Behind the Breakthrough

At 46, Williams enjoys a creative workspace at MIT, where he has taken a unique approach to his environment, even using yoga mats as makeshift reading corners. His journey began far from the hallowed halls of academia, on a 50-acre farm in Alabama, where he first encountered computers at just seven years old. That fascination quickly grew into a passion for theoretical computer science as he delved into computational complexity.

Drawn to the intricacies of the theory, he focused on how to mathematically classify the resources needed to solve hard problems.

Historical Context and Key Contributions

The historical foundation for understanding time and space complexity was laid by pioneers like Juris Hartmanis and Richard Stearns in the 1960s, who created essential definitions that researchers still rely on today. The complexity classes they defined paved the way for future explorations into the stark discrepancies between time and space.

For decades, researchers suspected that space is a more powerful resource than time, but they could prove only a slim advantage. Williams' research has dramatically widened that proven gap, giving the strongest evidence yet that space is indeed the more powerful resource.

The Breakthrough That Could Change Everything

Before Williams' work, progress on the relationship between time and space had been slow. The shift began when researchers James Cook and Ian Mertz devised a strikingly memory-efficient algorithm based on what came to be called ‘squishy pebbles’: the idea that data storage isn’t as rigidly constrained as previously thought.

Building on this method, Williams developed a procedure that uses memory in a way previously deemed impossible. The space-efficient version of an algorithm runs more slowly than the original, but it gets by on far less memory than earlier techniques could guarantee.

A Closer Look at the Implications

Williams' findings imply a concrete fact about computation: some problems that can be solved within a given amount of memory cannot be solved in a comparable amount of time. That establishes a provable difference between the computational power of memory and that of time. While many see the result as a potential paradigm shift, Williams remains cautious yet optimistic about the future.
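For readers who want the formal shape of this claim, here is a sketch of the reasoning in standard complexity notation. It assumes the multitape Turing machine model used in Williams' paper and glosses over technical conditions on the functions involved.

$$
\mathsf{TIME}[t(n)] \subseteq \mathsf{SPACE}\!\left[\sqrt{t(n)\,\log t(n)}\right]
$$

Now suppose every problem solvable in space $s(n)$ could also be solved in some time $t(n)$ with $\sqrt{t(n)\log t(n)}$ growing strictly slower than $s(n)$. Chaining the two containments would give

$$
\mathsf{SPACE}[s(n)] \subseteq \mathsf{TIME}[t(n)] \subseteq \mathsf{SPACE}\!\left[\sqrt{t(n)\,\log t(n)}\right],
$$

meaning everything solvable in space $s(n)$ would be solvable in asymptotically less space. That contradicts the classical space hierarchy theorem, so some problems solvable in space $s(n)$ must require time close to $s(n)^2$, up to logarithmic factors.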

In a field where every proven gap between resources is hard-won, his research offers new tools for understanding how efficient algorithms can be. As the boundaries of complexity theory expand, one can't help but wonder just how far this exploration of memory may lead.

The Road Ahead

Williams' journey has only begun. Although he has made a landmark discovery, the quest to fully understand the interplay of memory and time in algorithms is far from over. With fresh ideas and renewed perspectives entering the field, the next generation of researchers may soon follow in his footsteps.

As researchers continue to grapple with the fundamental question of P vs. PSPACE, what other mysteries of computation might they unravel? The universe of algorithms is vast, and it seems the best is yet to come.
