Revolutionizing Cosmic Mapping: Speed Meets Precision!

2025-09-16

Author: Ying

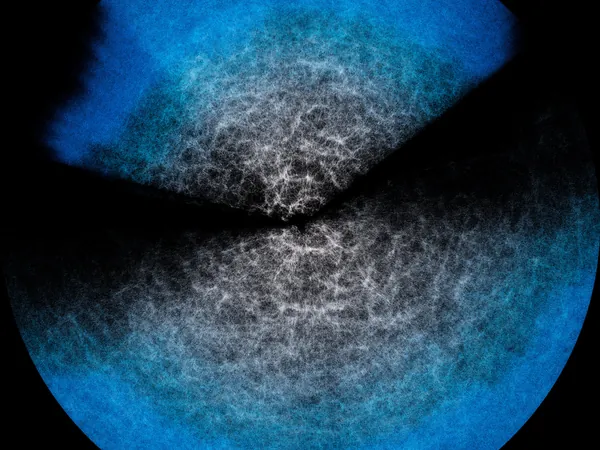

Think galaxies are vast? They’re mere specks in the grand tapestry of the universe! Countless galaxies gather into clusters, clusters meld into superclusters, and all of it is woven into a stunning cosmic web of filaments peppered with voids. Understanding this immense structure can feel overwhelming, but new developments are making it a whole lot simpler.

To navigate this vastness, scientists blend physics with advanced astronomical data, crafting models like the Effective Field Theory of Large-Scale Structure (EFTofLSS). These models, fueled by observations, statistically map the cosmic web and help estimate its fundamental parameters. However, the intricate calculations involved demand huge computational resources, particularly as datasets grow at an astonishing rate.

Enter emulators: an ingenious solution that dramatically speeds up the analysis without sacrificing accuracy. But how do they stack up against the models they imitate? An international research team, with members at institutions in Italy and Canada, has recently published a study of its emulator, Effort.jl, in the Journal of Cosmology and Astroparticle Physics. The findings show that Effort.jl matches the precision of the far more expensive model it emulates, and sometimes even surpasses it, all while running in minutes on a standard laptop instead of requiring a supercomputer!

Marco Bonici, a leading researcher from the University of Waterloo, explains the idea behind EFTofLSS with an analogy to water. In principle, you could describe flowing water by tracking every molecule and atomic interaction, but the computational cost grows so steeply that such a detailed treatment is practically unfeasible. Instead, models like EFTofLSS encapsulate the vital microscopic behavior in a description of the macroscopic flow, which here is the large-scale structure of the universe.

Traditional modeling relies on feeding vast amounts of observational data into complex algorithms, which can be time-consuming and resource-intensive. But with the astronomical data boom from current and upcoming surveys like DESI and Euclid, seeking efficient alternatives has become paramount.

This is where Effort.jl shines. Its core is a neural network trained on the outputs of the full model. Once trained, it can predict what the model would return for parameter combinations it has never seen, drastically reducing both time and resource needs.
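To make the emulation idea concrete, here is a minimal sketch in Python. It is not Effort.jl and does not use its API: the "expensive" model is a made-up formula, the parameter names and ranges are hypothetical, and a least-squares polynomial fit stands in for the neural network so the example stays short and deterministic. The workflow is the part that carries over: run the slow model at sampled parameters, fit a cheap surrogate to its outputs, then query the surrogate at parameters it has not seen.

```python
import numpy as np

K = np.linspace(0.1, 1.0, 20)  # toy wavenumber grid (hypothetical)

def toy_model(omega, n):
    """Stand-in for an expensive theory code (made-up formula)."""
    return K**n / (1.0 + (omega * K) ** 2)

def features(params):
    """Monomials up to total degree 4 in the centred parameters (omega, n)."""
    x = params[:, 0] - 1.0
    y = params[:, 1] - 1.0
    cols = [x**i * y**j for i in range(5) for j in range(5 - i)]
    return np.stack(cols, axis=1)

rng = np.random.default_rng(0)

# 1. Run the "expensive" model on a training set of parameter combinations.
train_params = rng.uniform([0.8, 0.8], [1.2, 1.2], size=(200, 2))
train_outputs = np.array([toy_model(o, n) for o, n in train_params])

# 2. Fit the surrogate (polynomial least squares here, a neural network in a real emulator).
weights, *_ = np.linalg.lstsq(features(train_params), train_outputs, rcond=None)

def emulate(omega, n):
    """Cheap prediction of the model output on the grid K."""
    return features(np.array([[omega, n]]))[0] @ weights

# 3. Check the surrogate on parameter combinations it was not trained on.
test_params = rng.uniform([0.8, 0.8], [1.2, 1.2], size=(50, 2))
max_rel_err = max(
    np.max(np.abs(emulate(o, n) / toy_model(o, n) - 1.0)) for o, n in test_params
)
print(f"worst relative error on unseen parameters: {max_rel_err:.2e}")
```

Once `weights` has been fitted, a call such as `emulate(1.05, 0.97)` returns the 20-point toy spectrum without evaluating `toy_model` again, which is where the speed-up of a real emulator comes from.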

What sets Effort.jl apart is its approach to training, which builds in existing knowledge about how certain parameters shift the predictions. This allows the emulator to reach its accuracy with significantly fewer training examples and even to run on consumer-grade hardware.
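The article does not say which parameter dependencies Effort.jl builds in, so the following Python sketch only illustrates the general principle with an assumed case: a toy model whose output scales exactly linearly with an amplitude parameter. All names, formulas and ranges are hypothetical, and polynomial least squares again stands in for a neural network. The "informed" surrogate divides the known amplitude out of the training data and learns only the remaining shape, while the "naive" one has to learn both dependencies from the same 12 examples.

```python
import numpy as np

K = np.linspace(0.1, 1.0, 20)  # toy wavenumber grid (hypothetical)

def toy_model(amp, omega):
    """Made-up 'expensive' model: amp rescales a shape set by omega."""
    amp = np.atleast_1d(amp)[:, None]
    omega = np.atleast_1d(omega)[:, None]
    return amp / (1.0 + (omega * K) ** 2)  # shape (n_samples, len(K))

def naive_features(amp, omega):
    """Quadratic features in both parameters, centred on the sampled box."""
    a, w = amp - 1.75, omega - 1.0
    return np.stack([np.ones_like(a), a, w, a**2, a * w, w**2], axis=1)

def shape_features(omega):
    """Quadratic features in omega only; amp is handled analytically."""
    w = omega - 1.0
    return np.stack([np.ones_like(w), w, w**2], axis=1)

rng = np.random.default_rng(1)

def draw(n):
    """Sample n (amp, omega) pairs from the assumed parameter ranges."""
    return rng.uniform(0.5, 3.0, n), rng.uniform(0.8, 1.2, n)

# Same small training set for both surrogates.
amp_tr, om_tr = draw(12)
y_tr = toy_model(amp_tr, om_tr)

# Naive: learn the dependence on amp and omega together.
w_naive, *_ = np.linalg.lstsq(naive_features(amp_tr, om_tr), y_tr, rcond=None)

# Informed: divide out the known linear amplitude, learn only the shape.
w_shape, *_ = np.linalg.lstsq(
    shape_features(om_tr), y_tr / amp_tr[:, None], rcond=None
)

# Compare both on unseen parameter combinations.
amp_te, om_te = draw(200)
truth = toy_model(amp_te, om_te)
pred_naive = naive_features(amp_te, om_te) @ w_naive
pred_informed = amp_te[:, None] * (shape_features(om_te) @ w_shape)

err_naive = np.max(np.abs(pred_naive / truth - 1.0))
err_informed = np.max(np.abs(pred_informed / truth - 1.0))
print(f"naive: {err_naive:.2e}   informed: {err_informed:.2e}")
```

The design choice is that anything known exactly is applied as a formula rather than learned, so the training examples are spent only on the part of the model that actually needs fitting.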

The study doesn't leave anything to chance; it rigorously validates Effort.jl’s predictions against both simulated and real data. The results are promising! Effort.jl’s predictions closely match those of the full model, and it does so while retaining parts of the analysis that are typically omitted to speed up calculations.

As we gear up for the treasure trove of data from DESI and Euclid, Effort.jl is poised to be an invaluable tool, enhancing our understanding of the universe's grand structure at a rapid pace. The future of cosmic exploration is here, and it’s faster and more accurate than ever!
