Is Your Doctor's Message Coming from A.I.? Shocking Truth Revealed!

2024-09-25

Introduction

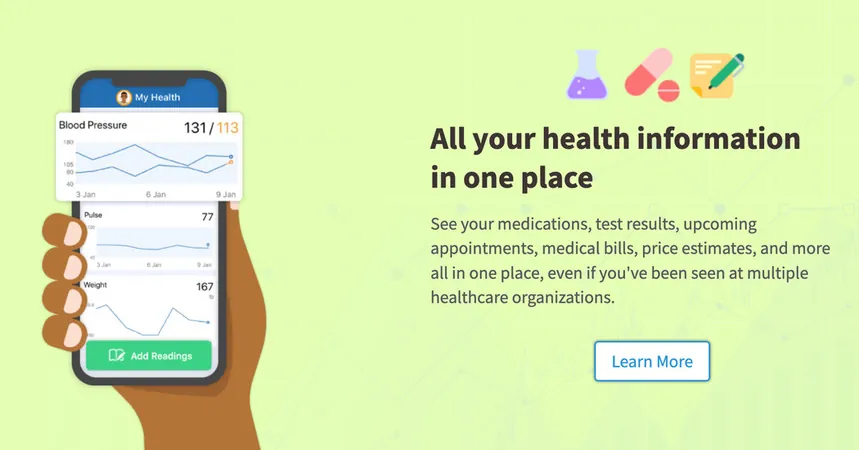

In the age of technology, patients are increasingly turning to online platforms like MyChart to communicate with their doctors. Every day, countless individuals describe their ailments, share symptoms, and ask for medical advice through these digital channels, believing they are speaking to their healthcare providers directly.

The Emergence of A.I. in Medical Communication

However, a startling trend is emerging: many of the responses patients receive might not be entirely from their doctors. Instead, a growing number of clinicians—about 15,000 across more than 150 health systems—are utilizing a new artificial intelligence feature integrated into MyChart, which assists in drafting replies to patient messages. This revelation raises critical questions about the trust patients place in these communications.

Lack of Transparency

According to interviews with officials at various health care systems, there has been a noticeable lack of transparency about the use of A.I.-generated content in responses. This has become a point of concern among medical experts, who argue that reliance on this technology can lead to grave missteps in clinical responses. The merging of A.I. into medical communication risks compromising patient safety and the integrity of doctor-patient relationships.

Historical Context of A.I. in Healthcare

Traditionally, the health care industry applied A.I. predominantly for administrative tasks, such as summarizing notes and managing insurance claims. Critics now fear that tools like MyChart's A.I. drafting feature might encroach upon clinical decision-making, which should be rooted in human judgment.

Impacts of the COVID-19 Pandemic

During the COVID-19 pandemic, as in-person medical visits became scarce, the reliance on messaging platforms surged. The A.I. feature, known as In Basket Art, utilizes information from a patient’s prior messages along with data from their electronic medical records to generate drafts for doctors. This innovative approach aims to save time but has sparked debate about its effectiveness and safety.

Effectiveness and Transparency of A.I. in Healthcare

While early studies indicate that this technology may help reduce healthcare professionals' feelings of burnout, it does not consistently save time. Many clinicians across esteemed health systems—including U.C. San Diego Health, Stanford Health Care, and U.N.C. Health—have begun using the A.I.-based tool, yet each institution independently determines how to test its efficacy and whether to disclose its use to patients.

Disclaimers and Ethical Concerns

Some systems, like U.C. San Diego Health, provide a disclaimer at the end of A.I. messages, indicating they were "generated automatically" and subsequently reviewed by a physician. Dr. Christopher Longhurst, the health system's chief clinical and innovation officer, advocates for transparency, stating, "I see, personally, no downside to being transparent." This sentiment contrasts sharply with other health systems, which fear that telling patients about the involvement of A.I. could leave them feeling betrayed.

The Risks of Automation Bias

The A.I. technology is designed to mimic the doctor’s voice closely, but this capability has raised ethical concerns. Research shows that humans often exhibit "automation bias," meaning they may inadvertently trust A.I. suggestions even if they contradict their professional knowledge. This creates a potential for significant errors in patient communications.

Errors and Misinformation

A study highlighting the risks found that A.I.-generated drafts contained inaccuracies, including erroneous information about patient vaccinations. Dr. Vinay Reddy, a family medicine physician, recounted an A.I. message that incorrectly assured a patient she had received her hepatitis B vaccine when, in fact, she had not.

Bioethical Implications

The debate also extends beyond safety: bioethicists question the broader implications of using A.I. in the doctor-patient dynamic. "Is this how we want to use A.I. in medicine?" asks Daniel Schiff from Purdue University. Unlike other applications of A.I. that seek to enhance clinical outcomes, tools like In Basket Art threaten to diminish genuine human interaction in an already strained healthcare environment.

Conclusion

Ultimately, as A.I. continues to infiltrate clinical settings, patients must remain vigilant and informed about who—and what—is truly behind their medical communications. The future of healthcare may very well hinge on finding the right balance between technological advancement and maintaining the essential human elements of care. Will your doctor’s next message be from them, or from a machine? The answer could change everything.
