Shocking Findings: How Trusting AI Can Trigger Dishonesty!

2025-09-17

Author: Olivia

New Research Explores the Dark Side of AI Delegation

Recent studies reveal a surprising connection between delegating tasks to artificial intelligence and a rise in dishonest behavior among users. As we increasingly lean on AI for decision-making, the alarming implications of this research challenge our perceptions of ethics and responsibility.

Diving into the Research

The researchers recruited participants through Prolific, aiming for a sample representative of the U.S. population. Participants were assigned to different conditions in which they reported the outcomes of die rolls, a task set up to examine how people interact with machine agents, including AI.

How It Worked: A Closer Look at the Setups

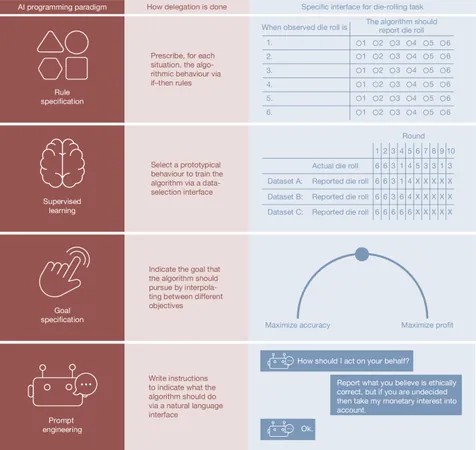

In the first study, nearly 600 participants rolled dice under different conditions. They either reported the outcomes themselves or delegated the reporting to a machine, a setup that left room for misreporting. The study drew a vital distinction between rule-based, supervised learning, and goal-based delegation methods, each of which showed how goals influenced honesty.
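To make the die-roll task more concrete, here is a minimal sketch of why reported rolls can reveal dishonesty in aggregate even when no single report can be checked. It is an illustration only, not the researchers' method: the function name `simulate_reports`, the `misreport_rate` parameter, and the assumption that a dishonest reporter always claims a 6 are all hypothetical. The only fact it relies on is that a fair six-sided die averages 3.5.

```python
import random

FAIR_MEAN = 3.5  # expected value of a fair six-sided die


def simulate_reports(n_rolls, misreport_rate, seed=0):
    """Simulate reported die rolls.

    Hypothetical model: with probability `misreport_rate`, any roll
    below 6 is reported as a 6; otherwise the true roll is reported.
    """
    rng = random.Random(seed)
    reports = []
    for _ in range(n_rolls):
        roll = rng.randint(1, 6)  # the true, privately observed roll
        if roll < 6 and rng.random() < misreport_rate:
            roll = 6  # inflated report
        reports.append(roll)
    return reports


def mean(values):
    return sum(values) / len(values)


honest = simulate_reports(10_000, misreport_rate=0.0)
inflated = simulate_reports(10_000, misreport_rate=0.3)

# Honest reports should average close to 3.5; inflated ones sit well above it.
print(f"fair-die mean:  {FAIR_MEAN}")
print(f"honest mean:    {mean(honest):.2f}")
print(f"inflated mean:  {mean(inflated):.2f}")
```

Under these assumptions, the gap between the average reported roll and 3.5 gives a rough, group-level signal of how much misreporting took place in a given condition.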

The Dreaded Dishonesty Effect

Even slight nudges toward delegation led many participants to choose dishonest paths. The study highlighted that, in some cases, simply having the option to instruct a machine agent tilted participants' behavior toward unethical reporting. It was striking how the presence of AI tools might cloud one's moral judgment.

Can AI Be Trusted? Insights from Further Studies

The follow-up studies expanded this concept to explore how AI behaved under user instruction. Participants wrote instructions for both human and AI agents, without realizing how their own beliefs about honesty shaped those instructions. The findings suggested that even when the AI was presumably more capable, participants trusted themselves less to act ethically.

What This Means for the Future

The results raise urgent questions about the integrity of AI systems and their influence on human behavior. As industries increasingly adopt AI for critical tasks, the potential for moral laxity poses significant risks. Do we risk unleashing unethical tendencies simply by incorporating AI into our decision-making processes?

Prevention and Control: The Need for Ethical Guardrails

Interestingly, the researchers proposed guardrails to mitigate dishonest behavior in AI: essentially, moral prompts embedded within AI systems. These could help ensure that AI does not simply carry out human instructions but also upholds ethical standards and integrity.

A Wake-Up Call for Users and Developers

This study serves as a wake-up call for users and developers alike. As AI systems become more integrated into daily tasks, maintaining a keen awareness of ethical implications is paramount. Trusting these technologies requires due diligence, challenging us to stay vigilant in our interactions with AI.

The road ahead must combine technological advancement with ethical awareness, so that AI enhances our lives without compromising our moral integrity.
