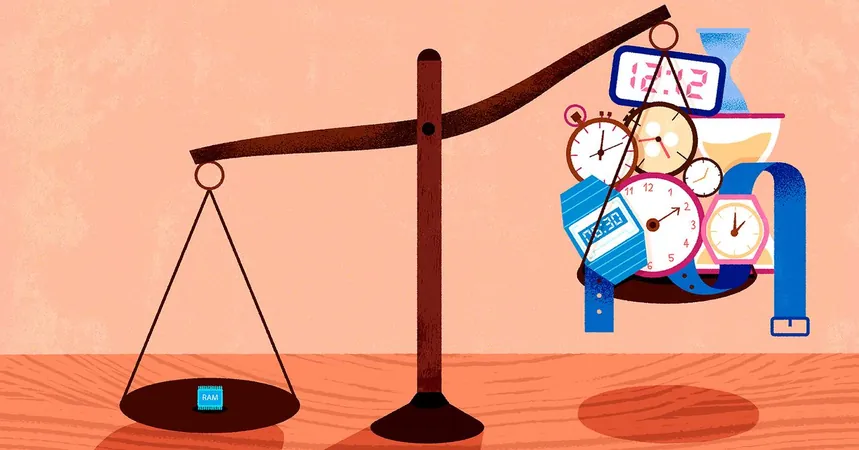

Memory vs. Time: Why Memory Is the Real Powerhouse in Computing

2025-07-13

Author: Charlotte

In a groundbreaking result, Ryan Williams, a theoretical computer scientist at MIT, has proved that memory, or space, is far more powerful than time in computation: a small amount of memory can do the work of a much larger amount of time. This radical notion challenges long-standing beliefs in computer science and could reshape our understanding of algorithms.

A Journey of Discovery

One afternoon in July 2024, Williams set out to disprove a startling conclusion he had reached two months earlier: that a small amount of memory could be as useful as a large amount of computation time. Skeptical yet curious, he went back through his proof, confident he would uncover an error. Hours later, to his astonishment, he had found none.

The Power of Space

Finding no error, Williams refined the argument into a proof that circulated widely in the research community and sparked excitement across the field. Avi Wigderson, a prominent theoretical computer scientist, praised the work, emphasizing its aesthetic and intellectual beauty.

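Traditionally, an algorithm's efficiency is measured by how much time and memory it requires. The two resources are linked: an algorithm that runs for t steps can touch at most t memory cells, so space is always at least as powerful as time. The real question was how much less than t memory suffices, and since 1975 the best known general answer saved only a logarithmic factor. Williams' proof shows that any algorithm running for t steps (on a multitape Turing machine) can be transformed into one that computes the same result using only about √(t log t) units of memory, although the transformed version may take far longer to run.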
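To give a feel for the scale of the savings, here is a back-of-the-envelope comparison. It assumes base-2 logarithms and drops all constant factors, since the theorems only hold up to constants: the trivial bound is t, the 1975 bound of Hopcroft, Paul, and Valiant is about t / log t, and Williams' bound is about √(t log t). The function name `space_bounds` is hypothetical, chosen for illustration.

```python
import math


def space_bounds(t: int) -> dict:
    """Rough memory needed to simulate t steps (constant factors dropped, log base 2 assumed)."""
    log_t = math.log2(t)
    return {
        "naive": t,                              # a t-step run touches at most t cells
        "hopcroft_paul_valiant": t / log_t,      # 1975 bound, about t / log t
        "williams": math.sqrt(t * log_t),        # 2025 bound, about sqrt(t log t)
    }


for t in (10**6, 10**9, 10**12):
    b = space_bounds(t)
    print(
        f"t = {t:.0e}: t/log t ~ {b['hopcroft_paul_valiant']:.2e}, "
        f"sqrt(t log t) ~ {b['williams']:.2e}"
    )
```

For a million-step computation, the older bound still needs tens of thousands of cells, while the square-root bound needs only a few thousand; the gap widens rapidly as t grows.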

A Chain Reaction

Williams also derived a striking consequence about the limits of time: some problems that can be solved with a linear amount of memory cannot be solved in time n^(2−ε) for any ε > 0 on a multitape Turing machine, meaning they essentially require quadratic time. This matches what theorists had long expected, and it moves the field a step closer to one of its oldest open questions, the P versus PSPACE problem: whether every problem solvable with a polynomial amount of memory can also be solved in a polynomial amount of time.
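The time limitation mentioned above follows from the simulation by a short argument, sketched here under the assumptions of the multitape Turing machine model and the standard space hierarchy theorem; logarithmic and constant factors are handled loosely (for example, log(n^(2−ε)) is absorbed as a constant multiple of log n).

```latex
\begin{aligned}
&\text{Williams' simulation:} && \mathrm{TIME}[t] \subseteq \mathrm{SPACE}\!\left[\sqrt{t \log t}\right] \\
&\text{Assume, for contradiction:} && \mathrm{SPACE}[n] \subseteq \mathrm{TIME}\!\left[n^{2-\varepsilon}\right] \text{ for some } \varepsilon > 0 \\
&\text{Substitute } t = n^{2-\varepsilon}\text{:} && \mathrm{SPACE}[n] \subseteq \mathrm{SPACE}\!\left[\sqrt{n^{2-\varepsilon} \log n}\right] = \mathrm{SPACE}\!\left[n^{1-\varepsilon/2}\sqrt{\log n}\right] \\
&\text{Space hierarchy theorem:} && \mathrm{SPACE}\!\left[n^{1-\varepsilon/2}\sqrt{\log n}\right] \subsetneq \mathrm{SPACE}[n], \text{ since } n^{1-\varepsilon/2}\sqrt{\log n} = o(n) \\
&\text{Contradiction, hence:} && \mathrm{SPACE}[n] \not\subseteq \mathrm{TIME}\!\left[n^{2-\varepsilon}\right] \text{ for every } \varepsilon > 0
\end{aligned}
```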

From Alabama to MIT

Williams grew up on a 50-acre farm in rural Alabama, and his journey into the world of computers began at age seven at a coding workshop. The mesmerizing digital fireworks he created there ignited a passion that would eventually lead him to theoretical computer science. He studied at the Alabama School of Math and Science and later at Cornell University, and the intricate balance between time and space has captivated him throughout his career.

A Legacy of Innovation

Computational complexity theory traces back to pioneers such as Juris Hartmanis, who helped establish the foundational definitions of time and space complexity in the 1960s. Williams stands on the shoulders of these giants, advancing our understanding of how space, unlike time, can be reused, an idea rooted in the very fabric of theoretical computation.

Breaking Through Barriers

Williams' breakthrough, the first progress on the general question in roughly 50 years, rests on a novel simulation method. The technique shows that any algorithm can be run with far less memory than it originally used and, as a consequence, that some problems cannot be solved within a limited amount of time.
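Williams' simulation works by breaking a computation into instances of the tree evaluation problem: leaves hold values, and each internal node combines its children's values. The toy sketch below shows only the straightforward depth-first strategy, which keeps one pending value per level, so memory grows with the tree's height h rather than its 2^h leaves. It is not the far cleverer algorithm of James Cook and Ian Mertz that Williams actually builds on, which reuses memory even more aggressively; the names `evaluate_tree`, `leaf`, and `combine`, and the summing example, are hypothetical.

```python
from typing import Callable, Tuple


def evaluate_tree(
    height: int,
    leaf: Callable[[int], int],
    combine: Callable[[int, int], int],
) -> Tuple[int, int]:
    """Evaluate a complete binary tree of the given height, depth-first.

    Returns (root_value, peak_stored), where peak_stored is the largest number
    of finished left-child values held at once while a right subtree is evaluated.
    """
    stored = 0
    peak = 0

    def visit(h: int, index: int) -> int:
        nonlocal stored, peak
        if h == 0:
            return leaf(index)             # leaves are numbered 0 .. 2**height - 1
        left = visit(h - 1, 2 * index)
        stored += 1                        # hold the left value while the right subtree runs
        peak = max(peak, stored)
        right = visit(h - 1, 2 * index + 1)
        stored -= 1                        # the left value is consumed by combine
        return combine(left, right)

    return visit(height, 0), peak


root, peak = evaluate_tree(10, leaf=lambda i: i, combine=lambda a, b: a + b)
print(root, peak)  # 523776 10: 1024 leaves summed, at most 10 values held at once
```

Besides the stored values, the recursion stack is also h frames deep, so the total memory is proportional to the height, not the number of leaves. The price is that nothing is cached: each value is computed exactly when needed.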

What Lies Ahead?

As Williams reflects on his findings, he acknowledges the daunting challenge that remains: establishing how far this newfound power of memory extends. With a fresh perspective on computation and new questions on the horizon, the result could open the door to major advances.

This adventure in mathematical exploration not only highlights the elegance of computational theory but also lays the groundwork for future work that could redefine how we approach algorithms and their applications.
